benchLM.ai


Building Your Own LLM Benchmark: A Step-by-Step Implementation Guide

Learn how to create custom LLM benchmarking systems with our comprehensive implementation guide covering architecture, development, and deployment strategies.

Glevd

@glevd

Creating custom LLM benchmarking systems has become increasingly important as organizations seek evaluation frameworks tailored to their specific needs, use cases, and performance requirements. While existing benchmarks provide valuable general-purpose evaluation capabilities, custom benchmarks enable organizations to assess models against their unique criteria, datasets, and operational constraints.

This implementation guide walks through building a custom benchmarking system from initial planning through deployment and maintenance. It covers both the technical implementation challenges and the strategic considerations that determine whether a benchmark succeeds and is adopted, whether within an organization or by a broader community.

Planning and Requirements Analysis

Defining Benchmark Objectives and Scope

Successful custom benchmark development begins with a clear definition of evaluation objectives, target use cases, and success criteria; these guide every later design and implementation decision. Defining objectives means deciding which capabilities, performance characteristics, or behaviors the benchmark should assess, and how those assessments will feed into decision-making.

Scope determination sets the boundaries of evaluation coverage: task types, difficulty levels, domains, and the model types the benchmark will address. A clearly bounded scope helps prevent feature creep while still covering the evaluation requirements and stakeholder needs that matter.

Success criteria turn these goals into measurable targets, such as adoption metrics, evaluation reliability standards, and user satisfaction targets, so that the benchmark's effectiveness can be assessed objectively and ongoing improvement can be directed where it is needed.

Stakeholder Analysis and Requirements Gathering

Effective benchmark development requires a thorough understanding of the stakeholder needs, constraints, and expectations that shape design and implementation priorities. Primary stakeholders typically include model developers, evaluation specialists, business decision-makers, and end users who will rely on benchmark results for various purposes.

Requirements gathering involves systematically collecting and analyzing stakeholder needs: functional requirements for evaluation capabilities, non-functional requirements for performance and usability, and constraints on resources, timelines, and technical capabilities.

Stakeholder prioritization helps resolve conflicting requirements and resource allocation decisions by establishing the relative importance of different stakeholder groups and their specific needs within the overall development strategy.

Technical Architecture Planning

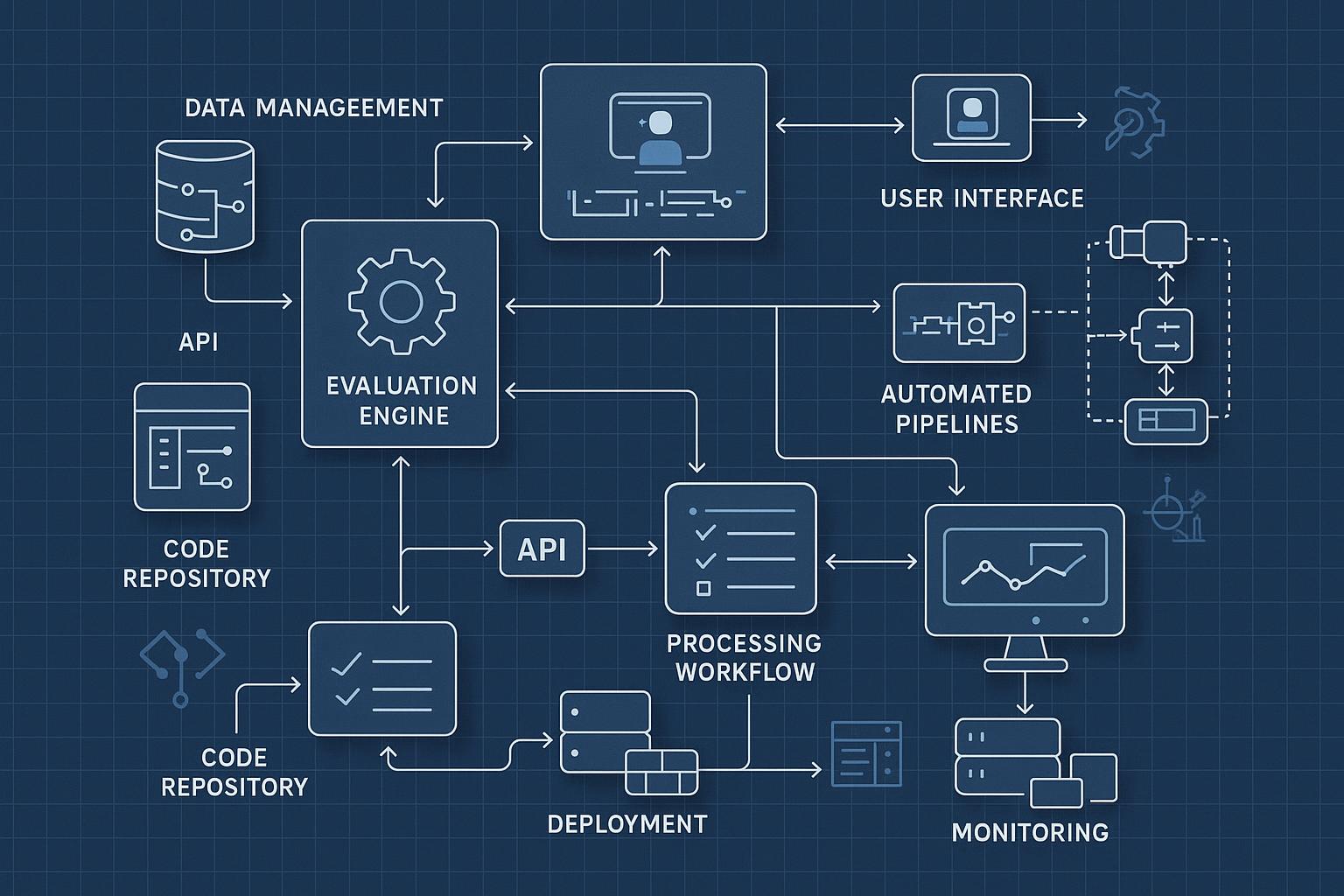

System architecture design establishes the technical foundation for benchmark implementation including component organization, data flow patterns, and integration approaches that support scalability, reliability, and maintainability requirements.

Technology stack selection involves choosing appropriate programming languages, frameworks, databases, and infrastructure components that align with organizational capabilities, performance requirements, and long-term maintenance considerations.

Scalability planning addresses anticipated growth in evaluation volume, user base, and feature complexity through architectural decisions that support horizontal and vertical scaling without major system redesign.

Dataset Development and Curation

Task Design and Selection Methodology

Task design principles guide the creation of evaluation tasks that effectively assess target capabilities while maintaining fairness, reliability, and practical relevance. Effective tasks should be discriminative (able to distinguish between different model capabilities), representative (reflecting real-world usage patterns), and unbiased (fair across different model types and approaches).
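A discriminative item is one that stronger models tend to get right and weaker models tend to get wrong. A minimal sketch of that check, assuming you already have 0/1 correctness scores for a handful of reference models on every candidate item, computes a corrected item-total correlation per item. The `scores` layout and the function names are illustrative assumptions, not part of any particular library.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either input has zero variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def discrimination(scores):
    """Corrected item-total correlation for each item.

    `scores` (hypothetical layout) maps model name -> list of 0/1 correctness
    values, one per item. For item i we correlate the models' correctness on i
    with their total on all *other* items, so the item is not compared with itself.
    """
    models = list(scores)
    n_items = len(scores[models[0]])
    result = []
    for i in range(n_items):
        item_col = [scores[m][i] for m in models]
        rest_totals = [sum(scores[m]) - scores[m][i] for m in models]
        result.append(pearson(item_col, rest_totals))
    return result
```

Items with a correlation near or below zero are candidates for review: they either fail to separate models or reward the weaker ones.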

Task difficulty calibration ensures that tasks are neither so easy that every model scores near the maximum (a ceiling effect) nor so hard that every model scores near zero (a floor effect), so that scores still separate models across different capability levels. Calibration involves systematic testing with known reference models and iterative refinement based on the resulting score distribution.
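Assuming the same hypothetical `scores` layout (model name mapped to per-item 0/1 correctness), ceiling and floor items can be flagged from their accuracy across the reference models. The 0.05 and 0.95 thresholds below are placeholder assumptions to tune for each benchmark.

```python
def item_accuracy(scores):
    """Fraction of reference models that answered each item correctly."""
    models = list(scores)
    n_items = len(scores[models[0]])
    return [sum(scores[m][i] for m in models) / len(models) for i in range(n_items)]


def flag_ceiling_floor(scores, low=0.05, high=0.95):
    """Map item index -> "ceiling" or "floor" for items outside [low, high]."""
    flags = {}
    for i, acc in enumerate(item_accuracy(scores)):
        if acc >= high:
            flags[i] = "ceiling"  # nearly every model solves it
        elif acc <= low:
            flags[i] = "floor"  # nearly no model solves it
    return flags
```

Flagged items are not necessarily bad; a floor item may become useful as models improve, so it can be parked rather than deleted.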

Task diversity planning addresses the need for comprehensive capability assessment through varied task types, domains, and complexity levels that provide holistic evaluation coverage while avoiding redundancy and evaluation inefficiency.
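One way to keep coverage broad without piling up near-duplicate tasks is stratified sampling: group candidate items by (task type, domain) and cap how many are drawn from each group. The sketch below assumes items are dicts with `type` and `domain` keys; the per-stratum cap is an arbitrary illustration.

```python
import random
from collections import defaultdict


def stratified_sample(items, key, per_stratum, seed=0):
    """Draw up to `per_stratum` items from each stratum defined by key(item).

    A fixed seed keeps the selection reproducible across runs.
    """
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for item in items:
        buckets[key(item)].append(item)
    sample = []
    for stratum in sorted(buckets):  # deterministic stratum order
        bucket = buckets[stratum]
        sample.extend(rng.sample(bucket, min(per_stratum, len(bucket))))
    return sample
```

Small strata are kept whole, so rare task types are not crowded out by abundant ones.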

Dataset Creation and Quality Assurance

Dataset development methodology encompasses systematic approaches to creating high-quality evaluation datasets including data collection strategies, annotation procedures, and quality control processes that ensure dataset reliability and validity.

Data collection strategies vary depending on task types and requirements, ranging from synthetic data generation for controlled evaluation scenarios to real-world data collection for authentic assessment contexts. Each approach involves specific considerations for data quality, representativeness, and ethical compliance.
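For controlled scenarios, synthetic generation lets you fix the difficulty knob and the random seed. As an illustrative example (the task and field names are assumptions), multi-digit addition problems can be generated with operand length as a crude difficulty parameter:

```python
import random


def make_addition_items(n, digits, seed=0):
    """Generate `n` addition problems whose operands have exactly `digits` digits.

    The fixed seed makes the dataset reproducible; `digits` acts as a simple
    difficulty control.
    """
    rng = random.Random(seed)
    lo, hi = 10 ** (digits - 1), 10 ** digits - 1
    items = []
    for i in range(n):
        a, b = rng.randint(lo, hi), rng.randint(lo, hi)
        items.append({
            "id": f"add-{digits}d-{i}",
            "prompt": f"What is {a} + {b}?",
            "reference": str(a + b),  # ground truth computed, not annotated
        })
    return items
```

Because the reference is computed rather than annotated, synthetic items avoid labeling errors, at the cost of being less representative of real usage.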

Quality assurance processes include multiple validation stages such as expert review, inter-annotator agreement analysis, and pilot testing that identify and address potential issues before full benchmark deployment.
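Inter-annotator agreement between two annotators is commonly summarized with Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is chance agreement from each annotator's label frequencies. A minimal sketch:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; kappa is
        # undefined here, and this sketch reports perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)
```

For example, `cohens_kappa(["y", "y", "n", "n"], ["y", "n", "n", "n"])` returns 0.5: observed agreement is 0.75 and chance agreement is 0.5. With more than two annotators, Fleiss' kappa or Krippendorff's alpha are the usual alternatives.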

Bias Mitigation and Fairness Considerations

Bias identification and assessment involves systematic analysis of potential biases in task design, dataset composition, and evaluation procedures that might systematically favor or disadvantage particular models, approaches, or demographic groups.

Mitigation strategies include diverse data sourcing, balanced representation across relevant dimensions, and careful attention to evaluation metric selection that promotes fair and equitable assessment across different model types and use cases.

Ongoing monitoring establishes processes for detecting and addressing bias issues that may emerge over time as new models, techniques, and use cases evolve within the benchmark ecosystem.
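A simple, repeatable check for both initial bias assessment and ongoing monitoring is to report accuracy per slice (for example per language, dialect, or topic) together with the largest gap between slices. The `(slice_name, correct)` record format is an assumption for illustration.

```python
from collections import defaultdict


def slice_accuracy(records):
    """records: iterable of (slice_name, correct) pairs -> {slice: accuracy}."""
    totals, hits = defaultdict(int), defaultdict(int)
    for slice_name, correct in records:
        totals[slice_name] += 1
        hits[slice_name] += int(correct)
    return {s: hits[s] / totals[s] for s in totals}


def max_gap(records):
    """Largest accuracy difference between any two slices."""
    acc = slice_accuracy(records)
    return max(acc.values()) - min(acc.values())
```

Tracking `max_gap` per model and per benchmark release makes it visible when a new dataset version or a new class of models widens a disparity.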

System Architecture and Implementation

Core System Components

Evaluation engine design forms the heart of custom benchmarking systems, responsible for executing evaluation tasks, collecting results, and computing performance metrics. The engine must support multiple evaluation modes including batch processing for large-scale evaluations and interactive assessment for development and debugging purposes.
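A minimal sketch of such an engine, assuming a model is any `prompt -> completion` function and a metric is any `(prediction, reference) -> score` function: yielding results one at a time lets the same core serve batch runs and interactive debugging. All names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass(frozen=True)
class Item:
    item_id: str
    prompt: str
    reference: str


@dataclass(frozen=True)
class Result:
    item_id: str
    prediction: str
    score: float


def evaluate(
    generate: Callable[[str], str],
    metric: Callable[[str, str], float],
    items: Iterable[Item],
) -> Iterator[Result]:
    """Stream one Result per item; callers decide how to consume it."""
    for item in items:
        prediction = generate(item.prompt)
        yield Result(item.item_id, prediction, metric(prediction, item.reference))


def run_batch(generate, metric, items) -> float:
    """Batch mode: consume the whole stream and return the mean score."""
    results = list(evaluate(generate, metric, items))
    return sum(r.score for r in results) / len(results) if results else 0.0
```

For interactive debugging, iterate over `evaluate(...)` directly and inspect each `Result` as it arrives instead of waiting for the full run.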

Data management systems handle storage, organization, and retrieval of evaluation datasets, model submissions, and results data. Effective data management requires careful attention to data integrity, access control, and performance optimization that support both current needs and future growth.
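As a sketch of the integrity side, the schema below (an illustrative assumption, using Python's built-in `sqlite3`) ties every per-item result to a run and a dataset version, and uses a composite primary key so an item cannot be scored twice within one run. Inserts happen in a single transaction, so a failed write leaves no half-recorded run.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS runs (
    run_id          INTEGER PRIMARY KEY,
    model_name      TEXT NOT NULL,
    dataset_version TEXT NOT NULL,
    created_at      TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE IF NOT EXISTS results (
    run_id     INTEGER NOT NULL REFERENCES runs(run_id),
    item_id    TEXT NOT NULL,
    prediction TEXT NOT NULL,
    score      REAL NOT NULL,
    PRIMARY KEY (run_id, item_id)
);
"""


def open_store(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # enforce the REFERENCES clause
    conn.executescript(SCHEMA)
    return conn


def record_run(conn, model_name, dataset_version, rows):
    """Insert a run and its (item_id, prediction, score) rows atomically."""
    with conn:  # commits on success, rolls back on any exception
        cur = conn.execute(
            "INSERT INTO runs (model_name, dataset_version) VALUES (?, ?)",
            (model_name, dataset_version),
        )
        run_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO results (run_id, item_id, prediction, score) VALUES (?, ?, ?, ?)",
            [(run_id, *row) for row in rows],
        )
    return run_id


def mean_score(conn, run_id):
    (value,) = conn.execute(
        "SELECT AVG(score) FROM results WHERE run_id = ?", (run_id,)
    ).fetchone()
    return value
```

SQLite is only a stand-in for local development here; the same schema translates directly to a server database once multiple workers write concurrently.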

User interface components provide accessible interaction points for different user types including model submission interfaces for developers, results viewing dashboards for analysts, and administrative interfaces for benchmark maintainers.

Automated Evaluation Pipeline

Pipeline architecture establishes the workflow for processing model submissions through evaluation tasks to final result generation. Effective pipelines support parallel processing for efficiency, error handling for reliability, and monitoring for operational visibility.
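The parallelism and error-handling parts can be sketched with the standard library's `ThreadPoolExecutor`, which suits I/O-bound work such as calls to model APIs. In this illustrative version, each input gets a few retries with exponential backoff, and inputs that still fail are recorded instead of aborting the whole run; the retry counts and delays are assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def with_retries(fn, attempts=3, base_delay=0.5):
    """Wrap fn so transient failures are retried with exponential backoff."""
    def wrapped(arg):
        for attempt in range(attempts):
            try:
                return fn(arg)
            except Exception:
                if attempt == attempts - 1:
                    raise  # out of retries: surface the last error
                time.sleep(base_delay * 2 ** attempt)
    return wrapped


def run_parallel(fn, inputs, max_workers=8, attempts=3, base_delay=0.5):
    """Apply fn to every input in a thread pool.

    Returns (results, errors): results maps input index -> output, errors maps
    input index -> the exception raised after the final retry.
    """
    task = with_retries(fn, attempts, base_delay)
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(task, x): i for i, x in enumerate(inputs)}
        for future, i in futures.items():
            try:
                results[i] = future.result()
            except Exception as exc:
                errors[i] = exc
    return results, errors
```

Keeping failures in a separate `errors` map gives the monitoring layer a direct count of failed items per run.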

Model integration systems handle the technical challenges of loading, configuring, and executing different model types within the evaluation environment. This includes support for various model formats, API interfaces, and computational requirements that reflect the diversity of modern LLM implementations.
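One common way to absorb that diversity is an adapter per model type behind a single `generate(prompt) -> str` interface, so the evaluation engine never needs to know which kind of model it is running. In the sketch below, `client.complete(messages)` is a HYPOTHETICAL interface standing in for whatever SDK you actually use; it is not a real library call.

```python
from dataclasses import dataclass
from typing import Any, Callable, Protocol


class TextModel(Protocol):
    def generate(self, prompt: str) -> str: ...


@dataclass
class LocalModel:
    """Adapter for an in-process model exposed as a plain function."""
    fn: Callable[[str], str]
    max_chars: int = 4000  # hypothetical guard against oversized prompts

    def generate(self, prompt: str) -> str:
        return self.fn(prompt[: self.max_chars])


@dataclass
class ChatAPIModel:
    """Adapter for a remote chat-style endpoint.

    `client.complete(messages)` is a hypothetical method assumed to return a string.
    """
    client: Any
    system_prompt: str = "You are being evaluated. Answer concisely."

    def generate(self, prompt: str) -> str:
        messages = [
            {"role": "system", "content": self.system_prompt},
            {"role": "user", "content": prompt},
        ]
        return self.client.complete(messages).strip()


def build_model(kind: str, **kwargs) -> TextModel:
    """Small registry so new model types can be added without touching the engine."""
    registry = {"local": LocalModel, "chat_api": ChatAPIModel}
    if kind not in registry:
        raise ValueError(f"unknown model kind: {kind}")
    return registry[kind](**kwargs)
```

The `system_prompt` is part of the evaluation setup, so it should be versioned alongside the dataset; changing it can change scores.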

Result processing and analysis components compute performance metrics, generate comparative analyses, and produce formatted outputs that support different use cases from detailed technical analysis to high-level summary reporting.

Security and Access Control

Authentication and authorization systems ensure appropriate access control for different user roles and sensitive evaluation data. This includes support for organizational accounts, role-based permissions, and audit logging that maintains security while enabling collaborative evaluation activities.
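Role-based permissions and audit logging can be combined in one place. The sketch below assumes an already-authenticated `user` dict with `name` and `role` keys; the role names, permission strings, and `upload_dataset` function are hypothetical examples.

```python
import logging
from functools import wraps

audit_log = logging.getLogger("benchmark.audit")

# Hypothetical role -> permission mapping; adapt to your own roles.
ROLE_PERMISSIONS = {
    "viewer": {"results:read"},
    "submitter": {"results:read", "models:submit"},
    "admin": {"results:read", "models:submit", "datasets:write", "users:manage"},
}


class PermissionDenied(Exception):
    pass


def requires(permission):
    """Allow the call only if the user's role grants `permission`; audit every attempt."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(user, *args, **kwargs):
            allowed = permission in ROLE_PERMISSIONS.get(user["role"], set())
            audit_log.info("user=%s action=%s allowed=%s", user["name"], permission, allowed)
            if not allowed:
                raise PermissionDenied(f"{user['name']} may not {permission}")
            return fn(user, *args, **kwargs)
        return wrapper
    return decorator


@requires("datasets:write")
def upload_dataset(user, name):
    return f"{name} uploaded by {user['name']}"
```

Logging denied attempts as well as allowed ones is what makes the audit trail useful when investigating access to proprietary models or held-out test data.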

Data protection measures address privacy and confidentiality requirements for proprietary models, sensitive datasets, and competitive evaluation scenarios. Protection measures include secure data handling, access logging, and compliance with relevant data protection regulations.

System security hardening involves implementing appropriate security controls for web interfaces, API endpoints, and infrastructure components that protect against common security threats while maintaining system usability and performance.

Evaluation Methodology Implementation

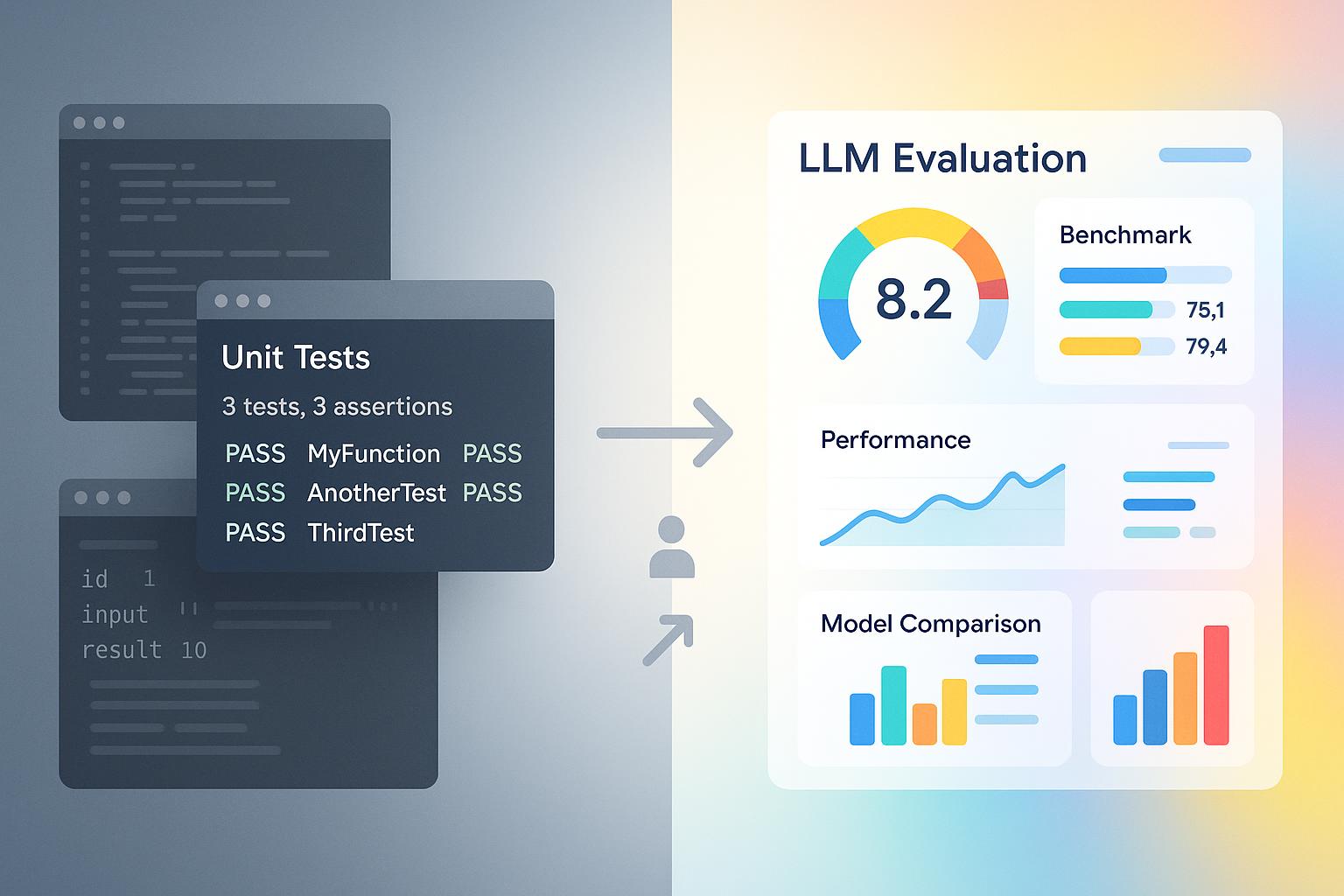

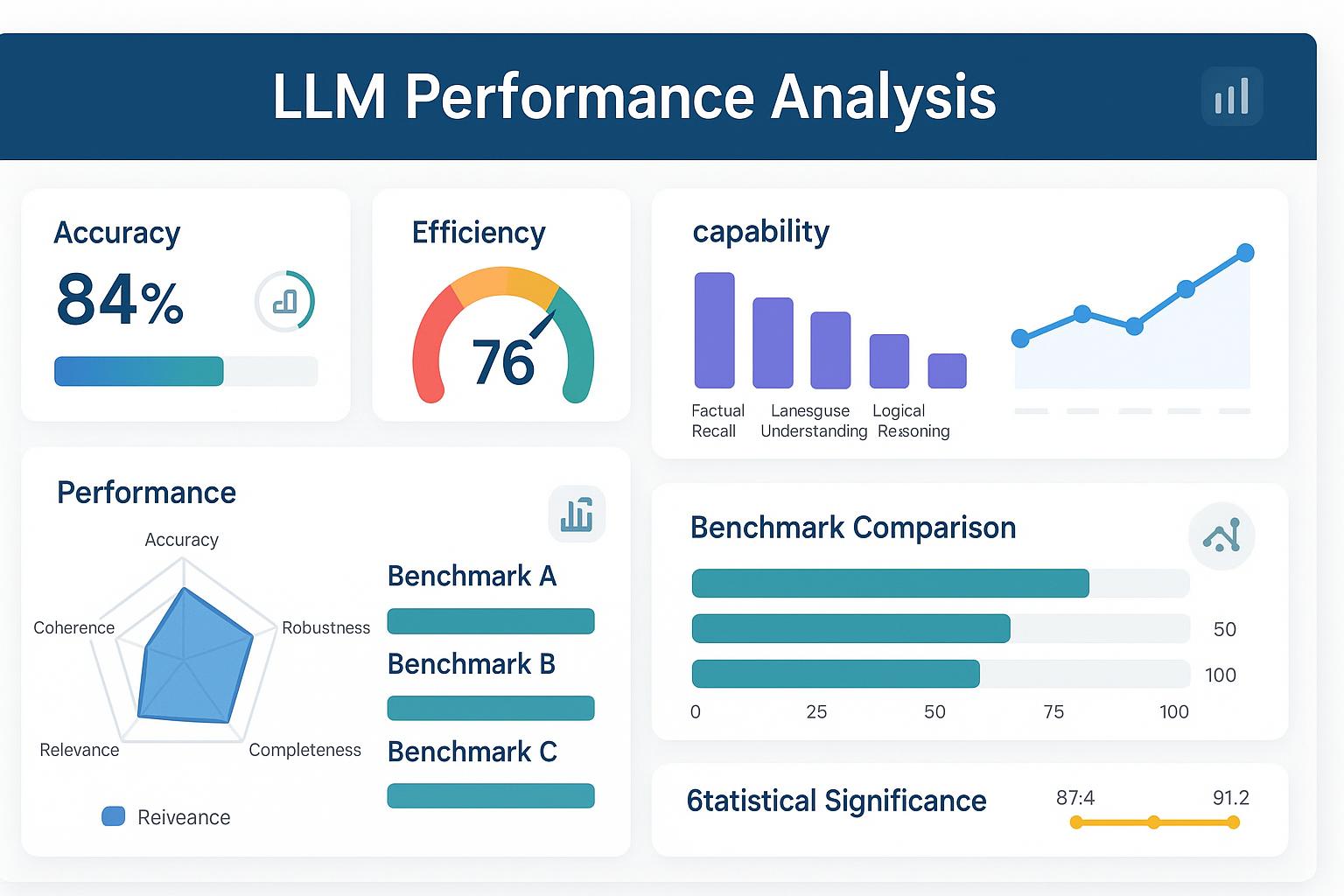

Metric Calculation and Analysis

Metric implementation requires careful attention to calculation accuracy, computational efficiency, and result interpretability. Custom benchmarks often require specialized metrics that reflect specific evaluation objectives and use case requirements not addressed by standard evaluation measures.
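As an example of where calculation details matter, the sketch below implements normalized exact match and token-level F1 in the style commonly used for extractive QA evaluation (as in the SQuAD evaluation script): lowercase, strip punctuation and English articles, then compare. The exact normalization rules are a choice you should document, since they change scores.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and the articles a/an/the, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall after normalization."""
    pred, ref = normalize(prediction).split(), normalize(reference).split()
    if not pred or not ref:
        return float(pred == ref)  # both empty counts as a match
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For instance, "The Eiffel Tower." exactly matches "eiffel tower" after normalization, while "Paris France" against "Paris" gets an F1 of 2/3 (precision 0.5, recall 1.0).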

Statistical analysis integration provides confidence intervals, significance testing, and other statistical measures that support reliable interpretation of benchmark results. This includes implementation of appropriate statistical methods and clear presentation of uncertainty information.
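A widely used, assumption-light way to attach a confidence interval to a benchmark score is the percentile bootstrap: resample the per-item scores with replacement many times and read off the spread of the resampled means. A minimal sketch (resample count and seed are arbitrary defaults):

```python
import random


def bootstrap_ci(scores, n_resamples=2000, alpha=0.05, seed=0):
    """Return (mean, lower, upper) using a percentile bootstrap over items."""
    if not scores:
        raise ValueError("need at least one score")
    rng = random.Random(seed)
    n = len(scores)
    means = sorted(sum(rng.choices(scores, k=n)) / n for _ in range(n_resamples))
    k = int(round(alpha / 2 * n_resamples))  # number of resamples cut from each tail
    return sum(scores) / n, means[k], means[n_resamples - 1 - k]
```

On a 100-item benchmark with 70% accuracy, the 95% interval spans roughly plus or minus 9 points, which is a useful reminder that small leaderboard gaps on small benchmarks are often noise.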

Comparative analysis capabilities enable systematic comparison across models, time periods, and evaluation conditions through standardized analysis procedures and visualization tools that facilitate insight extraction and decision-making.
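When two models are scored on the same items, a paired test is more sensitive than comparing two independent intervals. One simple option, sketched here under the usual exchangeability assumption, is a sign-flip permutation test on the per-item score differences:

```python
import random


def paired_permutation_test(scores_a, scores_b, n_resamples=10000, seed=0):
    """Two-sided paired permutation test on per-item score differences.

    Under the null hypothesis the two models are interchangeable on each item,
    so the sign of each difference is random. The p-value is the fraction of
    random sign assignments whose mean difference is at least as extreme as
    the observed one (with a +1 correction so it is never exactly zero).
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both models must be scored on the same items")
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    observed = abs(sum(diffs)) / n
    extreme = 0
    for _ in range(n_resamples):
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs)
        if abs(flipped) / n >= observed:
            extreme += 1
    return (extreme + 1) / (n_resamples + 1)
```

When many model pairs are compared at once, the resulting p-values should be adjusted for multiple comparisons before declaring differences significant.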

Human Evaluation Integration

Human evaluation workflows address assessment tasks that require human judgment including subjective quality evaluation, preference ranking, and complex reasoning assessment. Effective workflows balance evaluation quality with cost and scalability considerations.
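Preference rankings from human judges are often aggregated into a single rating per model. A minimal Elo-style sketch over (winner, loser) judgments is shown below; the K-factor and starting rating are conventional but arbitrary choices, and ties are not handled.

```python
def elo_ratings(comparisons, k=32.0, initial=1000.0):
    """Sequential Elo updates from (winner, loser) preference judgments."""
    ratings = {}
    for winner, loser in comparisons:
        rw = ratings.setdefault(winner, initial)
        rl = ratings.setdefault(loser, initial)
        # Probability the current ratings assign to the observed winner.
        expected_w = 1.0 / (1.0 + 10 ** ((rl - rw) / 400.0))
        ratings[winner] = rw + k * (1.0 - expected_w)
        ratings[loser] = rl - k * (1.0 - expected_w)
    return ratings
```

Sequential Elo depends on the order in which judgments are processed; fitting a Bradley-Terry model to all comparisons at once is an order-independent alternative.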

Annotator management systems handle recruitment, training, and quality control for human evaluators including inter-annotator agreement monitoring and performance feedback that maintain evaluation consistency and reliability.

Hybrid evaluation approaches combine automated and human assessment techniques to leverage the strengths of both approaches while managing costs and scalability challenges through strategic allocation of human evaluation resources.

Continuous Evaluation and Monitoring

Performance monitoring systems track benchmark system performance including evaluation throughput, error rates, and resource utilization that inform optimization efforts and capacity planning decisions.

Result quality assurance involves ongoing validation of evaluation results through cross-validation, expert review, and comparison with external benchmarks that maintain confidence in benchmark reliability and validity.

System evolution management addresses the need for benchmark updates, improvements, and extensions while maintaining result comparability and historical continuity that preserve the value of previous evaluation efforts.
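Comparability across updates is easier to enforce when every result is stored with a fingerprint of the exact dataset it was computed on. A minimal sketch, assuming items are JSON-serializable dicts with a unique `id` field:

```python
import hashlib
import json


def dataset_fingerprint(items):
    """Short SHA-256 fingerprint of a dataset, independent of item order."""
    h = hashlib.sha256()
    for item in sorted(items, key=lambda x: x["id"]):
        # sort_keys makes the serialization stable regardless of dict key order.
        h.update(json.dumps(item, sort_keys=True, ensure_ascii=False).encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()[:16]
```

Any edit to a prompt or reference changes the fingerprint, so leaderboards can refuse to rank results that were produced on different dataset versions.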

User Interface and Experience Design

Dashboard and Visualization Design

Results presentation interfaces provide clear, intuitive access to evaluation results through well-designed dashboards that support different user needs from quick overview to detailed analysis. Effective interfaces balance information density with usability and accessibility requirements.

Interactive visualization tools enable users to explore evaluation results through filtering, sorting, and drill-down capabilities that support investigation and insight discovery. Visualizations should be designed for both technical and non-technical audiences with appropriate detail levels and explanatory content.

Comparative analysis interfaces facilitate model comparison through side-by-side presentations, ranking displays, and statistical comparison tools that support informed decision-making and model selection processes.

Submission and Management Workflows

Model submission interfaces provide streamlined processes for submitting models for evaluation including clear instructions, validation feedback, and status tracking that minimize friction and user confusion.

Evaluation management tools enable users to track evaluation progress, access intermediate results, and manage multiple evaluation requests through organized interfaces that support both individual and organizational use cases.

Administrative interfaces provide benchmark maintainers with tools for system management, user administration, and content moderation that support efficient benchmark operation and community management.

Documentation and Support Systems

User documentation provides comprehensive guidance for benchmark usage including getting started guides, API documentation, and best practices that enable effective benchmark adoption and utilization.

Help and support systems offer multiple channels for user assistance including FAQ resources, community forums, and direct support contacts that address user questions and issues promptly and effectively.

Educational content helps users understand benchmark methodology, result interpretation, and best practices through tutorials, webinars, and case studies that promote effective benchmark utilization and community engagement.

Deployment and Infrastructure

Infrastructure Architecture and Scaling

Cloud deployment strategies leverage scalable infrastructure services to support variable evaluation loads while managing costs and maintaining performance. Effective strategies include auto-scaling capabilities, load balancing, and geographic distribution that optimize user experience and system reliability.

Container orchestration enables efficient deployment and management of evaluation services through containerization technologies that support consistent environments, easy scaling, and simplified maintenance procedures.

Database optimization addresses performance requirements for storing and retrieving large volumes of evaluation data through appropriate database selection, indexing strategies, and caching mechanisms that maintain responsive user experiences.

Monitoring and Observability

System monitoring provides real-time visibility into benchmark system health, performance, and usage patterns through comprehensive monitoring dashboards and alerting systems that enable proactive issue identification and resolution.

Performance analytics track key system metrics including evaluation throughput, response times, and resource utilization that inform optimization efforts and capacity planning decisions.

User analytics provide insights into benchmark usage patterns, user behavior, and feature adoption that guide user experience improvements and feature development priorities.

Backup and Disaster Recovery

Data backup strategies ensure protection of valuable evaluation data, user submissions, and system configurations through regular backups, geographic redundancy, and tested recovery procedures that minimize data loss risks.

System redundancy provides fault tolerance through redundant infrastructure components, failover mechanisms, and load distribution that maintain benchmark availability during component failures or maintenance activities.

Recovery procedures establish clear processes for system restoration following various failure scenarios including detailed runbooks, testing procedures, and communication plans that minimize downtime and user impact.

Community Engagement and Adoption

Launch Strategy and Marketing

Community building involves identifying and engaging target user communities through appropriate channels including academic conferences, industry forums, and social media platforms that reach potential benchmark users and contributors.

Launch planning coordinates benchmark announcement and initial promotion through strategic timing, compelling messaging, and demonstration materials that generate interest and early adoption among target audiences.

Partnership development establishes relationships with relevant organizations, research groups, and industry partners that can provide validation, promotion, and ongoing support for benchmark adoption and development.

Contribution and Collaboration Frameworks

Open source strategies enable community contributions to benchmark development through clear contribution guidelines, code review processes, and recognition systems that encourage ongoing community involvement and improvement.

Collaborative evaluation initiatives facilitate joint evaluation efforts among multiple organizations through shared evaluation protocols, result sharing agreements, and collaborative analysis projects that enhance benchmark value and adoption.

Academic partnerships establish relationships with research institutions that can provide validation, extension, and ongoing development support through student projects, research collaborations, and publication opportunities.

Governance and Sustainability

Governance structures establish clear decision-making processes, community representation, and conflict resolution mechanisms that ensure fair and effective benchmark management as the community grows and evolves.

Sustainability planning addresses long-term benchmark maintenance and development through funding strategies, organizational support, and succession planning that ensure continued benchmark availability and improvement.

Evolution management provides frameworks for benchmark updates, extensions, and improvements while maintaining backward compatibility and community consensus on development directions and priorities.

Quality Assurance and Validation

Testing and Validation Procedures

System testing encompasses comprehensive testing of all benchmark components including unit testing, integration testing, and end-to-end testing that ensure system reliability and correctness across different usage scenarios and conditions.

Evaluation validation involves systematic verification of evaluation procedures, metric calculations, and result accuracy through comparison with known benchmarks, expert review, and statistical validation techniques.

User acceptance testing engages target users in testing benchmark functionality, usability, and value proposition through structured testing programs that identify issues and improvement opportunities before full deployment.

Performance Benchmarking and Optimization

Performance profiling identifies system bottlenecks and optimization opportunities through systematic analysis of computational performance, memory usage, and network utilization across different evaluation scenarios and load conditions.

Optimization implementation addresses identified performance issues through code optimization, infrastructure improvements, and architectural refinements that enhance system efficiency and user experience.

Capacity planning projects future resource requirements based on anticipated growth in users, evaluations, and system complexity through modeling and analysis that inform infrastructure investment decisions.
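A back-of-the-envelope version of that projection multiplies out the evaluation workload and divides by measured throughput. The numbers in the usage note are hypothetical, and the GPU count assumes perfect parallelism with no retries or idle time, so real plans need headroom.

```python
import math


def gpu_hours(n_items, n_models, tokens_per_item, tokens_per_second_per_gpu):
    """Total GPU-hours to evaluate every model on every item."""
    total_tokens = n_items * n_models * tokens_per_item
    return total_tokens / tokens_per_second_per_gpu / 3600


def gpus_for_deadline(hours, deadline_hours):
    """GPUs needed to finish within the deadline, assuming perfect parallelism."""
    return math.ceil(hours / deadline_hours)
```

For example, 10,000 items across 20 models at about 1,000 tokens per item is 200 million tokens; at 2,000 tokens per second per GPU that is 100,000 GPU-seconds, about 27.8 GPU-hours, so finishing within 8 hours needs at least 4 GPUs.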

Continuous Improvement Processes

Feedback collection establishes systematic processes for gathering user feedback, identifying improvement opportunities, and prioritizing development efforts through surveys, interviews, and usage analytics that guide benchmark evolution.

Iterative development implements regular update cycles that incorporate user feedback, address identified issues, and add new capabilities through structured development processes that maintain system stability while enabling continuous improvement.

Community engagement maintains ongoing dialogue with benchmark users and contributors through regular communication, community meetings, and collaborative planning processes that ensure benchmark development remains aligned with community needs and priorities.

Conclusion and Best Practices

Building successful custom LLM benchmarks requires careful attention to both technical implementation and community engagement considerations. The most effective benchmarks combine rigorous evaluation methodology with user-friendly interfaces and strong community support that drives adoption and ongoing improvement.

Key success factors include:

- Clear objectives and scope definition that guide all development decisions

- Robust technical architecture that supports scalability and reliability requirements

- High-quality datasets and evaluation procedures that ensure meaningful and fair assessment

- User-centered design that makes benchmarks accessible and valuable to target audiences

- Strong community engagement that drives adoption and ongoing development support

Implementation best practices emphasize iterative development approaches that enable early feedback incorporation, comprehensive testing that ensures system reliability, and ongoing monitoring that maintains benchmark quality and relevance over time.

The future of custom LLM benchmarking will likely emphasize more sophisticated evaluation approaches, better integration with existing tools and workflows, and enhanced collaboration features that support community-driven development and improvement. Organizations that invest in building high-quality custom benchmarks will be well-positioned to drive innovation in their specific domains while contributing to the broader advancement of LLM evaluation practices.

Success in custom benchmark development requires balancing technical excellence with practical utility, ensuring that benchmarks not only provide accurate and reliable evaluation but also deliver genuine value to users and contribute meaningfully to the advancement of language model development and deployment practices.
