benchLM.ai


The Complete Guide to LLM Benchmarking: Everything You Need to Know in 2025

Master LLM benchmarking with our comprehensive guide covering evaluation methodologies, best practices, and implementation strategies for 2025.

Glevd

@glevd

Large Language Model (LLM) benchmarking has become the cornerstone of artificial intelligence evaluation, providing systematic approaches to assess model capabilities, performance, and reliability across diverse tasks and applications. As organizations increasingly rely on LLMs for critical business functions, understanding how to properly evaluate and compare these models has never been more important.

The landscape of LLM benchmarking spans multiple evaluation methodologies, from academic research frameworks to commercial assessment platforms, each designed to measure different aspects of model performance, including accuracy, efficiency, safety, and practical utility. This guide explores the fundamental concepts, methodologies, and best practices that define effective LLM benchmarking in 2025.

Understanding LLM Benchmarking Fundamentals

What is LLM Benchmarking?

LLM benchmarking refers to the systematic evaluation of large language models using standardized tests, datasets, and metrics to assess their performance across various tasks and capabilities. Unlike traditional software testing, LLM benchmarking must account for the probabilistic nature of model outputs (the same prompt can yield different responses when outputs are sampled), the subjective quality of natural language output, and the diverse range of capabilities that modern language models possess.

The primary purpose of LLM benchmarking is to provide objective, comparable measurements of model performance that enable informed decision-making in model selection, deployment, and optimization. Effective benchmarking serves multiple stakeholders, including researchers developing new models, organizations selecting models for deployment, and users seeking to understand model capabilities and limitations.

Contemporary LLM benchmarking covers multiple evaluation dimensions, including task-specific performance, general reasoning capabilities, factual accuracy, safety considerations, and computational efficiency. This multi-dimensional approach reflects the complexity of modern language models and the diverse requirements of real-world applications.

Historical Evolution of LLM Evaluation

The evolution of LLM benchmarking parallels the development of language models themselves, beginning with simple perplexity measurements for early language models and growing into sophisticated multi-task evaluation frameworks for contemporary LLMs. Early evaluation approaches focused primarily on linguistic competence, measuring how well models could predict the next word or complete sentences grammatically.
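Perplexity, the metric behind those early evaluations, is the exponential of the average negative log-likelihood a model assigns to the tokens of held-out text; lower is better. A minimal sketch, assuming you already have per-token natural-log probabilities from some model (the values in the example are made up):

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities.

    PPL = exp(-(1/N) * sum(log p(token_i | preceding tokens)))
    A model that assigns probability 1 to every token scores 1.0.
    """
    if not token_logprobs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)  # mean negative log-likelihood
    return math.exp(avg_nll)


# Probability 0.25 for every token gives a perplexity of (approximately) 4.
print(perplexity([math.log(0.25)] * 10))
```

A perplexity of k can be read as the model being, on average, as uncertain as a uniform choice among k tokens. Because the value depends on the tokenizer, perplexities are only directly comparable between models that share one.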

The introduction of transformer architectures and the emergence of large-scale pre-trained models necessitated more comprehensive evaluation approaches that could assess reasoning, knowledge, and task-specific capabilities. Benchmarks like GLUE (General Language Understanding Evaluation) and SuperGLUE established standardized evaluation protocols that enabled systematic comparison across different models and architectures.

Recent developments in LLM benchmarking reflect the growing sophistication of language models, with evaluation frameworks now incorporating assessments of mathematical reasoning, code generation, creative writing, and alignment with human preferences. The emergence of human evaluation platforms and preference-based ranking systems represents a significant evolution toward more nuanced and practical assessment approaches.

Core Principles of Effective Benchmarking

Effective LLM benchmarking relies on several fundamental principles that ensure evaluation reliability, validity, and practical utility. Reproducibility stands as a cornerstone principle, requiring that benchmark results can be consistently replicated across different evaluation runs and environments. This necessitates careful control of random seeds, evaluation procedures, and environmental factors that might influence model performance.
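One way to control the "evaluation procedure" part is to derive everything random from a single recorded seed and to fingerprint the full run configuration, so two runs can be checked for identical settings before their scores are compared. A minimal sketch; the configuration fields and model name below are hypothetical examples, not a standard schema:

```python
import hashlib
import json
import random


def sample_eval_items(item_ids: list[str], n: int, seed: int) -> list[str]:
    """Draw the same evaluation subset every time for a given seed."""
    rng = random.Random(seed)  # local RNG: unaffected by other code using `random`
    return rng.sample(sorted(item_ids), n)  # sort first so input order doesn't matter


def config_fingerprint(config: dict) -> str:
    """Stable SHA-256 hash of a run configuration (independent of key order)."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical run configuration: every setting that can change scores.
config = {
    "model": "example-model-v1",
    "temperature": 0.0,
    "max_tokens": 512,
    "prompt_template": "qa-v2",
    "seed": 1234,
}
items = [f"q{i}" for i in range(100)]
subset = sample_eval_items(items, n=10, seed=config["seed"])
print(config_fingerprint(config)[:12], subset[:3])
```

Greedy decoding (temperature 0) reduces sampling variance, but some inference stacks are still not bit-for-bit deterministic, so storing the fingerprint alongside each score remains useful.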

Comprehensiveness represents another critical principle, demanding that benchmarks assess multiple aspects of model capability rather than focusing on narrow task-specific performance. Modern benchmarking frameworks incorporate diverse task types, difficulty levels, and evaluation criteria to provide holistic assessment of model capabilities and limitations.

Fairness and bias mitigation are equally essential: benchmarks should evaluate different model types and training approaches even-handedly, and should not reward or overlook behavior that disadvantages particular demographic groups. This includes careful attention to dataset composition, evaluation metric selection, and interpretation of results to avoid systematic biases that might favor particular models or approaches.

Major Benchmarking Approaches and Methodologies

Academic Evaluation Frameworks

Academic benchmarking frameworks provide rigorous, research-oriented evaluation approaches that emphasize scientific validity and comprehensive assessment of model capabilities. These frameworks typically incorporate multiple tasks, standardized datasets, and peer-reviewed evaluation protocols that enable systematic comparison across different models and research approaches.

The Holistic Evaluation of Language Models (HELM) framework, developed by Stanford University's Center for Research on Foundation Models (CRFM), exemplifies comprehensive academic evaluation by assessing models across multiple dimensions, including accuracy, calibration, robustness, fairness, bias, toxicity, and efficiency. HELM's systematic approach provides detailed analysis of model strengths and weaknesses while identifying areas for improvement and further research.
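Calibration asks whether a model's stated confidence matches how often it is right. A common summary is expected calibration error (ECE): bin predictions by confidence, then take the item-weighted average gap between mean confidence and accuracy in each bin. A minimal sketch with equal-width bins; it illustrates the general metric and does not assume HELM's exact binning choices:

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """ECE with equal-width confidence bins over [0, 1].

    ECE = sum over bins b of (|b| / N) * |mean_confidence(b) - accuracy(b)|
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and the same length")
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Confidence 1.0 goes into the last bin instead of overflowing.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue  # empty bins contribute nothing
        mean_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(mean_conf - accuracy)
    return ece


# Overconfident model: claims 90% confidence but is right half the time (ECE about 0.4).
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, True, False]))
```

Ten equal-width bins is a conventional default; with few items per bin the estimate gets noisy, so it helps to report the bin count alongside the score.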

Academic frameworks often prioritize transparency and reproducibility, providing detailed documentation of evaluation procedures, datasets, and analysis methods that enable independent verification and extension of results. This emphasis on scientific rigor makes academic benchmarks particularly valuable for research purposes and long-term model development efforts.

Commercial Assessment Platforms

Commercial benchmarking platforms focus on practical evaluation needs of organizations deploying LLMs in business applications, emphasizing metrics that directly relate to operational requirements and user satisfaction. These platforms typically provide user-friendly interfaces, automated evaluation capabilities, and business-oriented reporting that supports decision-making in commercial contexts.

Platforms like Artificial Analysis provide comprehensive performance comparisons across multiple LLM providers, focusing on metrics that matter for practical deployment including response quality, speed, cost-effectiveness, and reliability. These commercial platforms often incorporate real-world usage scenarios and business-relevant evaluation criteria that complement academic benchmarking approaches.
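Speed is usually split into latency (time to first token, TTFT) and throughput (output tokens per second once generation is under way). A minimal sketch that derives both from a request's start time and per-token arrival timestamps, assuming you can record those from a streaming API; definitions vary slightly between platforms, so this is not Artificial Analysis's exact methodology:

```python
from typing import Sequence


def speed_metrics(request_start: float, token_times: Sequence[float]) -> dict[str, float]:
    """Latency and throughput from streamed token arrival times (in seconds).

    ttft: time from sending the request to receiving the first token.
    tokens_per_second: decode rate measured after the first token, so that
    queueing and prompt processing do not dilute it.
    """
    if not token_times:
        raise ValueError("no tokens received")
    ttft = token_times[0] - request_start
    decode_time = token_times[-1] - token_times[0]
    tps = (len(token_times) - 1) / decode_time if decode_time > 0 else float("nan")
    return {"ttft": ttft, "tokens_per_second": tps, "total": token_times[-1] - request_start}


# Hypothetical trace: first token after 0.5 s, then one token every 20 ms.
times = [0.5 + 0.02 * i for i in range(101)]
print(speed_metrics(0.0, times))
```

Single requests are noisy, so in practice these values are collected over many requests and reported as medians or percentiles.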

Commercial benchmarking platforms frequently offer continuous monitoring capabilities that track model performance over time, enabling organizations to identify performance degradation, compare different model versions, and optimize their LLM deployments based on actual usage patterns and requirements.

Community-Driven Evaluation Initiatives

Community-driven benchmarking initiatives leverage collective expertise and diverse perspectives to create comprehensive evaluation frameworks that reflect real-world usage patterns and requirements. These initiatives often incorporate crowdsourced evaluation, community feedback, and collaborative development approaches that enhance benchmark quality and relevance.

The Chatbot Arena platform represents a successful community-driven approach: users submit a prompt, compare responses from two anonymized models side by side, and vote for the better one, and these pairwise preferences are aggregated into a ranking of conversational quality. This approach provides valuable insights into practical model performance that complement traditional automated evaluation metrics.
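Arena-style leaderboards turn pairwise human votes (model A wins, model B wins, or tie) into ratings. A minimal sketch of the classic online Elo update; the K-factor of 32 and starting rating of 1000 are conventional choices assumed here, the model names are placeholders, and this is not a reproduction of any particular leaderboard's published method:

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_ratings(battles, k: float = 32.0, initial: float = 1000.0) -> dict[str, float]:
    """Sequentially update ratings from (model_a, model_b, winner) votes.

    winner is "a", "b", or "tie"; a tie counts as half a win for each side.
    """
    ratings = defaultdict(lambda: initial)  # unseen models start at `initial`
    for model_a, model_b, winner in battles:
        score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        e_a = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (score_a - e_a)
        ratings[model_a] += delta  # zero-sum: B loses exactly what A gains
        ratings[model_b] -= delta
    return dict(ratings)


votes = [
    ("model-x", "model-y", "a"),
    ("model-x", "model-z", "a"),
    ("model-y", "model-z", "tie"),
]
print(elo_ratings(votes))
```

Because online Elo depends on the order in which votes arrive, one common remedy is to recompute ratings over many shuffled orderings, or to fit a batch model such as Bradley-Terry to all votes at once.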

Community initiatives often excel at identifying emerging evaluation needs, incorporating diverse use cases, and maintaining relevance to evolving user requirements. The collaborative nature of these initiatives enables rapid adaptation to new model capabilities and changing application requirements.

Practical Implementation of LLM Benchmarking

Setting Up Evaluation Environments

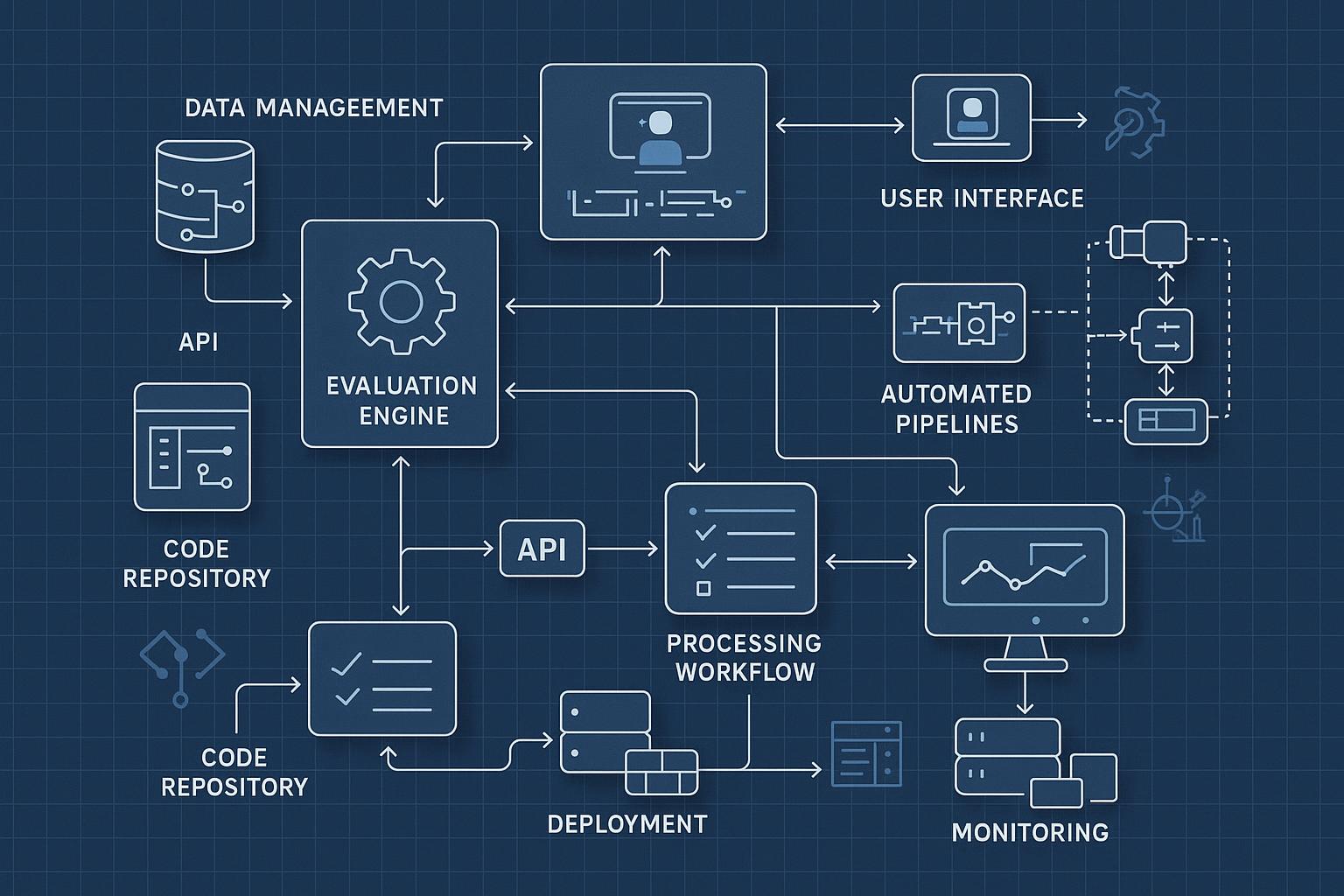

Successful LLM benchmarking requires careful setup of evaluation environments that ensure consistent, reliable, and reproducible results. This includes establishing standardized hardware configurations, software environments, and evaluation procedures that minimize variability and enable fair comparison across different models and evaluation runs.

Hardware considerations include GPU specifications, memory requirements, and computational resources that can significantly impact evaluation results, particularly for performance and efficiency measurements. Standardizing hardware configurations or accounting for hardware differences in result interpretation ensures fair comparison across different evaluation setups.

Software environment setup involves configuring appropriate frameworks, libraries, and dependencies that support consistent model loading, inference, and evaluation. Version control of software components and careful documentation of environment specifications enable reproducible evaluation and facilitate troubleshooting of evaluation issues.
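Part of that documentation can be automated by writing an environment snapshot into every evaluation report. A minimal sketch using only the Python standard library; the package names passed in are examples, so substitute whatever your evaluation harness actually imports:

```python
import json
import platform
import sys
from importlib import metadata


def capture_environment(packages: list[str]) -> dict:
    """Snapshot interpreter, OS, and package versions for an evaluation report."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # record absence explicitly rather than skipping it
    return {
        "python": sys.version.split()[0],
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "machine": platform.machine(),
        "packages": versions,
    }


# Example package list; replace with the libraries your harness depends on.
print(json.dumps(capture_environment(["numpy", "torch", "transformers"]), indent=2))
```

GPU model and driver version are not visible from the standard library; on NVIDIA hardware they are typically taken from `nvidia-smi` output and stored in the same report.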

Selecting Appropriate Benchmarks

Benchmark selection requires careful consideration of evaluation objectives, model characteristics, and intended applications to ensure that chosen benchmarks provide relevant and meaningful assessment of model capabilities. Different benchmarks emphasize different aspects of model performance, making selection a critical factor in evaluation effectiveness.

Task-specific benchmarks provide detailed assessment of model performance in particular domains or applications, such as mathematical reasoning, code generation, or creative writing. These specialized benchmarks offer deep insights into specific capabilities but may not provide comprehensive assessment of overall model utility.
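For code generation, task-specific benchmarks commonly report pass@k: the probability that at least one of k sampled completions passes the problem's unit tests. A lower-variance approach than drawing exactly k samples is to draw n >= k, count the c that pass, and use the unbiased estimator 1 - C(n - c, k) / C(n, k) introduced alongside the HumanEval benchmark. A minimal sketch (the per-problem counts in the example are made up):

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled, c: completions that passed, k: attempt budget.
    Computes 1 - C(n - c, k) / C(n, k): one minus the chance that a random
    size-k subset of the n samples contains no passing completion.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must include a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Benchmark score = mean over problems; these (n, c) pairs are hypothetical.
results = [(10, 3), (10, 0), (10, 10)]
print(sum(pass_at_k(n, c, k=1) for n, c in results) / len(results))
```

For k = 1 the estimator reduces to c / n, the plain fraction of passing samples; sampling more completions per problem lowers variance at the cost of extra generation.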

General-purpose benchmarks assess broad model capabilities across multiple tasks and domains, providing holistic evaluation that supports general model comparison and selection decisions. These benchmarks offer comprehensive coverage but may lack the depth needed for specialized application assessment.

Interpreting Benchmark Results

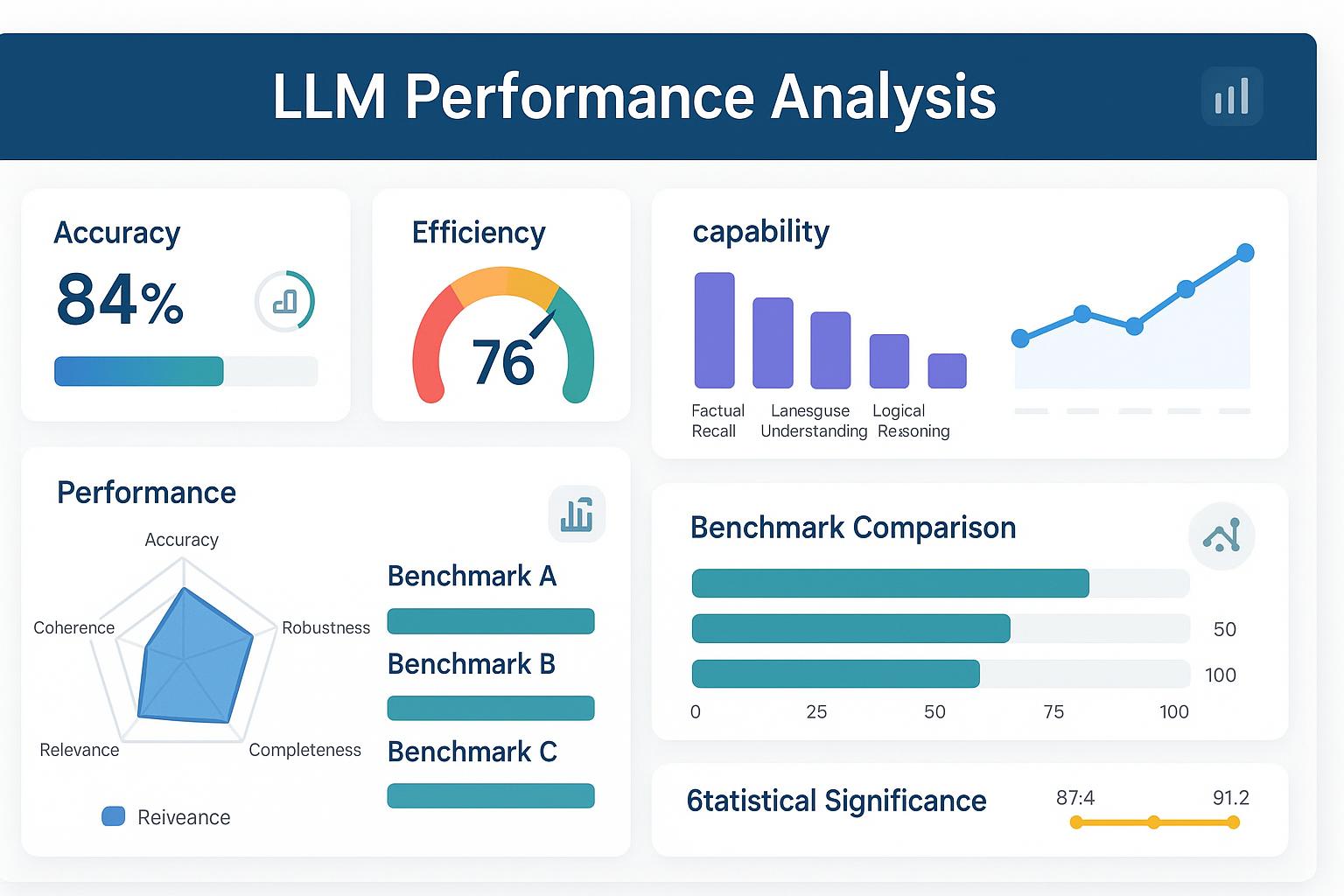

Proper interpretation of benchmark results requires understanding of evaluation methodologies, statistical significance, and practical implications of performance differences. Raw benchmark scores must be contextualized within appropriate frameworks that account for evaluation uncertainty, task difficulty, and real-world relevance.

Statistical significance analysis helps distinguish meaningful performance differences from random variation, which matters most when comparing models with similar performance levels. Confidence intervals, significance tests, and effect size measurements provide quantitative frameworks for assessing the reliability and importance of observed performance differences.
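When two models answer the same items, a paired bootstrap is a simple way to put a confidence interval on their accuracy difference: resample items with replacement, recompute the difference each time, and read off percentiles. A minimal sketch using only the standard library; 10,000 resamples and a 95% percentile interval are common defaults rather than requirements, and the per-item results are made up:

```python
import random
from typing import Sequence


def paired_bootstrap_ci(
    correct_a: Sequence[int],
    correct_b: Sequence[int],
    n_resamples: int = 10_000,
    alpha: float = 0.05,
    seed: int = 0,
) -> tuple[float, float, float]:
    """Observed accuracy difference (A - B) and a percentile bootstrap interval.

    correct_a[i] and correct_b[i] are 1/0 outcomes on the *same* item i, so
    items are resampled as pairs to preserve the correlation between models.
    """
    n = len(correct_a)
    if n == 0 or n != len(correct_b):
        raise ValueError("need equal-length, non-empty paired results")
    diffs = [a - b for a, b in zip(correct_a, correct_b)]
    observed = sum(diffs) / n
    rng = random.Random(seed)  # fixed seed keeps the interval reproducible
    stats = sorted(sum(rng.choices(diffs, k=n)) / n for _ in range(n_resamples))
    lo = stats[int((alpha / 2) * n_resamples)]
    hi = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return observed, lo, hi


# Hypothetical per-item results for two models on 8 shared questions.
a = [1, 1, 0, 1, 1, 0, 1, 1]
b = [1, 0, 0, 1, 0, 0, 1, 1]
print(paired_bootstrap_ci(a, b))
```

The observed gap here is 0.25, but with only eight items the lower end of the interval is 0, so this difference would not count as significant; real comparisons need far more items.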

Practical significance assessment considers whether observed performance differences translate into meaningful improvements in real-world applications. This requires understanding the task requirements, user expectations, and operational constraints that determine the practical value of a benchmark improvement.

Advanced Benchmarking Considerations

Specialized Domain Evaluation

Domain-specific benchmarking addresses the unique requirements and challenges of evaluating LLMs for specialized applications such as healthcare, legal, financial, or scientific domains. These evaluations require specialized datasets, domain expertise, and evaluation criteria that reflect the specific requirements and constraints of targeted applications.

Healthcare LLM evaluation must consider medical accuracy, safety implications, regulatory compliance, and integration with clinical workflows. Specialized medical benchmarks assess knowledge of medical concepts, diagnostic reasoning, treatment recommendations, and adherence to medical ethics and safety standards.

Legal domain evaluation focuses on legal reasoning, case analysis, regulatory compliance, and ethical considerations specific to legal applications. Legal benchmarks assess understanding of legal concepts, ability to analyze case law, and adherence to professional standards and ethical requirements.

Bias and Fairness Assessment

Bias evaluation represents a critical component of comprehensive LLM benchmarking, assessing whether models exhibit unfair or discriminatory behavior across different demographic groups, cultural contexts, or application scenarios. This evaluation requires specialized datasets, metrics, and analysis approaches that can identify and quantify various forms of bias.

Demographic bias assessment examines whether models provide equitable performance and treatment across different demographic groups including gender, race, age, and cultural background. This requires careful analysis of model outputs, evaluation datasets, and performance metrics to identify systematic disparities in model behavior.
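A first quantitative pass at performance disparity is to slice accuracy by group and report the largest gap. A minimal sketch, assuming each evaluation record is already tagged with a group label (the labels below are placeholders):

```python
from collections import defaultdict
from typing import Iterable


def accuracy_by_group(records: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Accuracy per group from (group_label, is_correct) records."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        hits[group] += int(ok)
    return {g: hits[g] / totals[g] for g in totals}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    """Largest accuracy difference between any two groups (0 means parity)."""
    return max(per_group.values()) - min(per_group.values())


records = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]
per_group = accuracy_by_group(records)
print(per_group, max_accuracy_gap(per_group))
```

A gap is only informative alongside per-group sample sizes: with four items per group, as in this toy example, a 0.25 gap amounts to a single answer.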

Cultural bias evaluation assesses whether models exhibit preferences or assumptions that favor particular cultural perspectives or worldviews. This includes analysis of cultural references, value judgments, and contextual interpretations that might reflect cultural biases in training data or model development processes.

Safety and Alignment Evaluation

Safety evaluation assesses whether LLMs behave in ways that are safe, beneficial, and aligned with human values and intentions. This includes evaluation of harmful content generation, misuse potential, and adherence to ethical guidelines and safety standards.

Harmful content assessment evaluates models' tendency to generate content that could be harmful, offensive, or dangerous, including hate speech, violence, illegal activities, or misinformation. Safety benchmarks test models' ability to refuse inappropriate requests while still giving helpful and informative responses to legitimate queries.
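Because the goal is two-sided, safety results are naturally reported as a pair: how often the model refuses prompts that should be refused, and how often it wrongly refuses benign ones (over-refusal). A minimal sketch; deciding whether a response *is* a refusal is the hard part and is delegated to an `is_refusal` callable supplied by the caller. The keyword stub shown is hypothetical and for illustration only, since it would miss many real refusals:

```python
from typing import Callable, Iterable


def refusal_rates(
    results: Iterable[tuple[bool, str]],
    is_refusal: Callable[[str], bool],
) -> dict[str, float]:
    """Refusal rate on harmful prompts and false-refusal rate on benign prompts.

    results: (prompt_is_harmful, model_response) pairs.
    """
    harmful = refused_harmful = benign = refused_benign = 0
    for prompt_is_harmful, response in results:
        refused = int(is_refusal(response))
        if prompt_is_harmful:
            harmful += 1
            refused_harmful += refused
        else:
            benign += 1
            refused_benign += refused
    return {
        "harmful_refusal_rate": refused_harmful / harmful if harmful else float("nan"),
        "benign_refusal_rate": refused_benign / benign if benign else float("nan"),
    }


def keyword_refusal(response: str) -> bool:
    # Illustrative stub only: real evaluations need a far more robust classifier.
    return response.lower().startswith(("i can't", "i cannot", "i won't"))


demo = [
    (True, "I can't help with that."),
    (True, "Sure, here is how..."),
    (False, "Here is a recipe for banana bread."),
    (False, "I cannot answer questions about chemistry."),
]
print(refusal_rates(demo, keyword_refusal))
```

A well-behaved model scores high on the first rate and low on the second; reporting only one of them hides the trade-off.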

Alignment evaluation assesses whether models behave in accordance with human values and intentions, including helpfulness, honesty, and harmlessness. This evaluation often incorporates human preference data and value-based assessment criteria that reflect desired model behavior and ethical considerations.

Future Trends and Emerging Developments

Automated Evaluation Systems

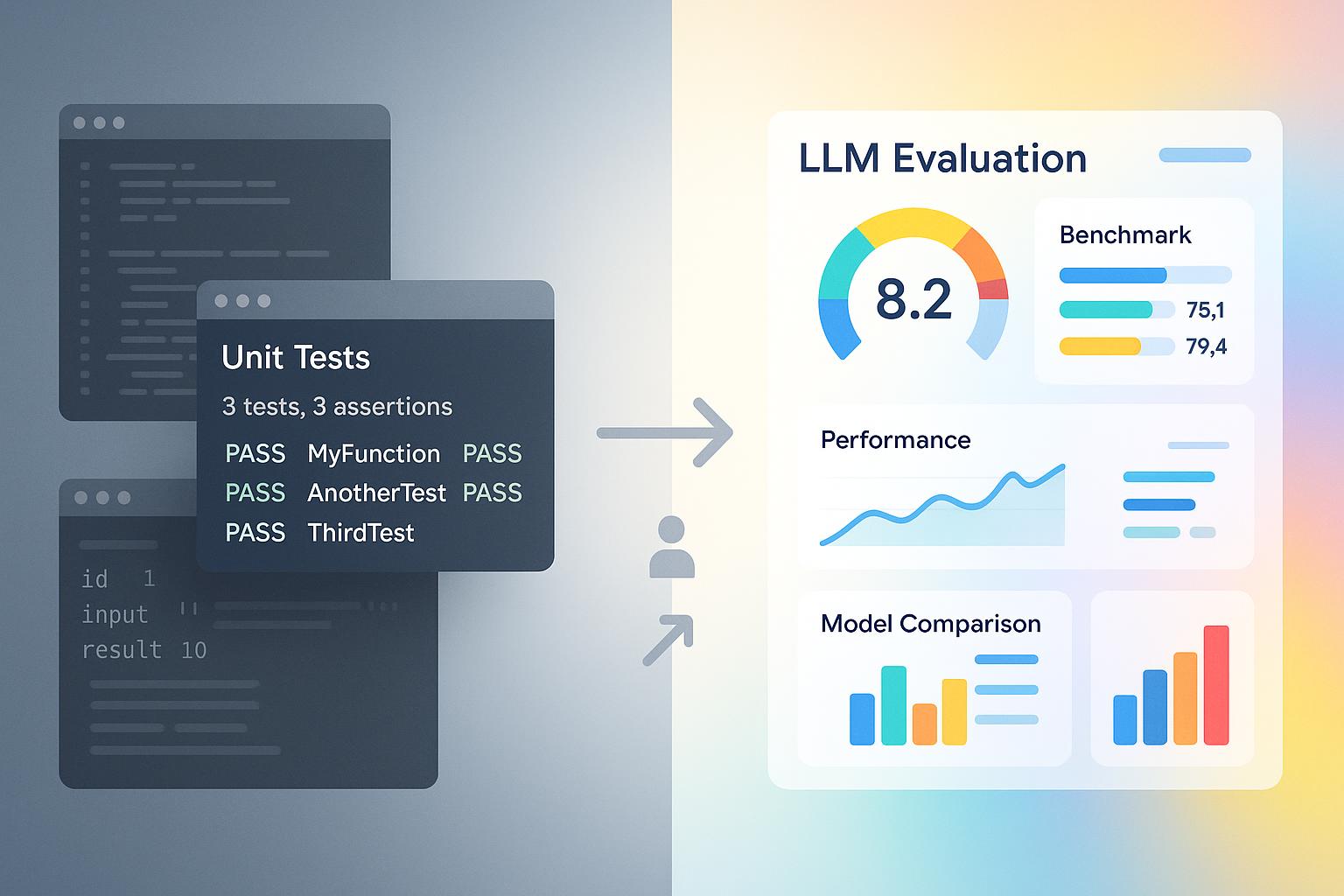

The development of automated evaluation systems represents a significant trend toward more scalable, consistent, and cost-effective LLM benchmarking. These systems use AI-powered evaluation approaches that aim to approximate human judgment of model outputs while retaining the scalability and consistency advantages of automation.

AI-powered evaluation systems use advanced language models to assess the quality, accuracy, and appropriateness of other models' outputs, potentially providing more nuanced evaluation than traditional automated metrics while maintaining scalability advantages over human evaluation approaches.
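Judge models are known to be sensitive to presentation details such as which answer appears first. One common mitigation is to ask for a pairwise verdict in both orders and keep it only when the two agree. A minimal sketch; `judge` stands in for whatever function sends a prompt to your judge model and returns its text, and the prompt wording and single-letter reply format are assumptions:

```python
from typing import Callable, Optional

# Hypothetical judge prompt; wording and reply format are assumptions.
PROMPT = (
    "Question:\n{question}\n\n"
    "Answer A:\n{first}\n\n"
    "Answer B:\n{second}\n\n"
    "Which answer is better? Reply with exactly one letter: A or B."
)


def parse_verdict(reply: str) -> Optional[str]:
    """Return 'A' or 'B' if the reply is exactly that letter, else None."""
    token = reply.strip().strip(".").upper()
    return token if token in ("A", "B") else None


def pairwise_judgment(
    question: str, answer_1: str, answer_2: str, judge: Callable[[str], str]
) -> str:
    """Ask the judge in both orders so that position bias cancels out.

    Returns "1" or "2" for a consistent winner, "tie" when the two verdicts
    disagree (the choice tracked position, not quality), or "invalid" if
    either reply could not be parsed.
    """
    forward = parse_verdict(
        judge(PROMPT.format(question=question, first=answer_1, second=answer_2))
    )
    swapped = parse_verdict(
        judge(PROMPT.format(question=question, first=answer_2, second=answer_1))
    )
    if forward is None or swapped is None:
        return "invalid"
    if forward == "A" and swapped == "B":
        return "1"  # answer_1 preferred in both positions
    if forward == "B" and swapped == "A":
        return "2"  # answer_2 preferred in both positions
    return "tie"


# A judge that always picks whichever answer is shown first is purely
# position-biased; the swap turns its verdicts into a tie.
print(pairwise_judgment("What is 2+2?", "4", "5", judge=lambda prompt: "A"))  # prints "tie"
```

Counting inconsistent verdicts as ties is one convention; another is to discard them. Either way, it is worth reporting how often they occur, because a high rate suggests the judge is unreliable for that task.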

Continuous evaluation systems enable ongoing assessment of model performance in production environments, providing real-time monitoring of model behavior and performance degradation. These systems support proactive model management and optimization based on actual usage patterns and performance trends.
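At its simplest, continuous evaluation compares a rolling window of recent production outcomes (for example, pass/fail from automated checks or user feedback) against a baseline measured offline, and raises an alert when the window drops too far below it. A minimal sketch; the window size and tolerance are hypothetical values to tune per application:

```python
from collections import deque


class RollingMonitor:
    """Flags degradation when a rolling success rate drops below baseline."""

    def __init__(self, baseline: float, window: int = 200, tolerance: float = 0.05):
        self.baseline = baseline    # success rate measured offline, e.g. on a benchmark
        self.tolerance = tolerance  # how far below baseline is still acceptable
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically

    def record(self, success: bool) -> bool:
        """Add one outcome; return True if the degradation alert should fire."""
        self.outcomes.append(success)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data for a full window yet
        rate = sum(self.outcomes) / len(self.outcomes)
        return rate < self.baseline - self.tolerance


monitor = RollingMonitor(baseline=0.90, window=10, tolerance=0.05)
stream = [True] * 10 + [False] * 3
alerts = [monitor.record(ok) for ok in stream]
print(alerts.index(True))  # 11: the second failure pulls the window rate to 0.8
```

Waiting for a full window avoids alerting on the first few noisy results; a production setup might also require the rate to stay low for several consecutive checks before paging anyone.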

Multimodal Evaluation Approaches

The emergence of multimodal language models necessitates evaluation approaches that can assess performance across multiple modalities including text, images, audio, and video. Multimodal benchmarking requires specialized datasets, evaluation metrics, and analysis approaches that can assess cross-modal understanding and generation capabilities.

Vision-language evaluation assesses models' ability to understand and generate content that combines textual and visual information, including image captioning, visual question answering, and multimodal reasoning tasks. These evaluations require careful consideration of both textual and visual quality criteria.

Audio-language evaluation focuses on models' ability to process and generate content involving speech, music, and other audio modalities. This includes assessment of speech recognition, audio generation, and cross-modal understanding between audio and text modalities.

Real-World Performance Assessment

The growing emphasis on real-world performance assessment reflects recognition that benchmark performance may not always translate to practical utility in actual deployment scenarios. Real-world evaluation approaches focus on assessing model performance in authentic usage contexts with realistic constraints and requirements.

User satisfaction evaluation incorporates feedback from actual users of LLM systems, providing insights into practical utility, user experience, and real-world effectiveness that complement traditional benchmark metrics. This approach emphasizes practical value over theoretical performance.

Production performance monitoring assesses model behavior in live deployment environments, tracking metrics such as user engagement, task completion rates, and business impact that reflect actual value delivery rather than benchmark performance.

Conclusion and Best Practices

Effective LLM benchmarking requires a comprehensive approach that combines multiple evaluation methodologies, considers diverse stakeholder needs, and maintains focus on practical utility and real-world relevance. The most successful benchmarking programs incorporate both automated and human evaluation approaches while addressing specialized requirements and emerging evaluation challenges.

Best practices for LLM benchmarking include establishing clear evaluation objectives, selecting appropriate benchmarks and metrics, ensuring reproducible evaluation procedures, and interpreting results within appropriate contexts that account for statistical significance and practical relevance. Regular updates to evaluation approaches and benchmarks help maintain relevance as models and applications continue to evolve.

The future of LLM benchmarking will likely emphasize more sophisticated evaluation approaches that better capture real-world utility, incorporate multimodal capabilities, and address emerging challenges in AI safety and alignment. Organizations and researchers who invest in comprehensive, forward-looking evaluation capabilities will be best positioned to navigate the evolving landscape of large language model development and deployment.

As the field continues to mature, the integration of academic rigor, commercial practicality, and community insights will drive the development of more effective and comprehensive benchmarking approaches that serve the diverse needs of the LLM ecosystem while supporting responsible and beneficial AI development.

