benchLM.ai

LLM Benchmark Results Analysis: How to Interpret Performance Metrics Like a Pro

Master the art of interpreting LLM benchmark results with our expert guide to performance metrics, statistical analysis, and decision-making frameworks.

Glevd

@glevd

Understanding how to properly interpret LLM benchmark results represents one of the most critical skills for anyone working with large language models, whether for research, business applications, or technical decision-making. The complexity of modern benchmarking systems, combined with the nuanced nature of language model performance, creates numerous opportunities for misinterpretation that can lead to poor model selection and deployment decisions.

Professional interpretation of benchmark results requires deep understanding of statistical concepts, evaluation methodologies, and the practical implications of different performance measurements. This comprehensive guide provides the analytical framework and practical tools needed to extract meaningful insights from benchmark data while avoiding common interpretation pitfalls that plague both novice and experienced practitioners.

Fundamental Concepts in Performance Measurement

Understanding Benchmark Architectures

Modern LLM benchmarks employ sophisticated architectures that combine multiple evaluation approaches, metrics, and analysis techniques to provide comprehensive assessment of model capabilities. Understanding these architectural components is essential for proper interpretation of benchmark results and assessment of their relevance to specific use cases and requirements.

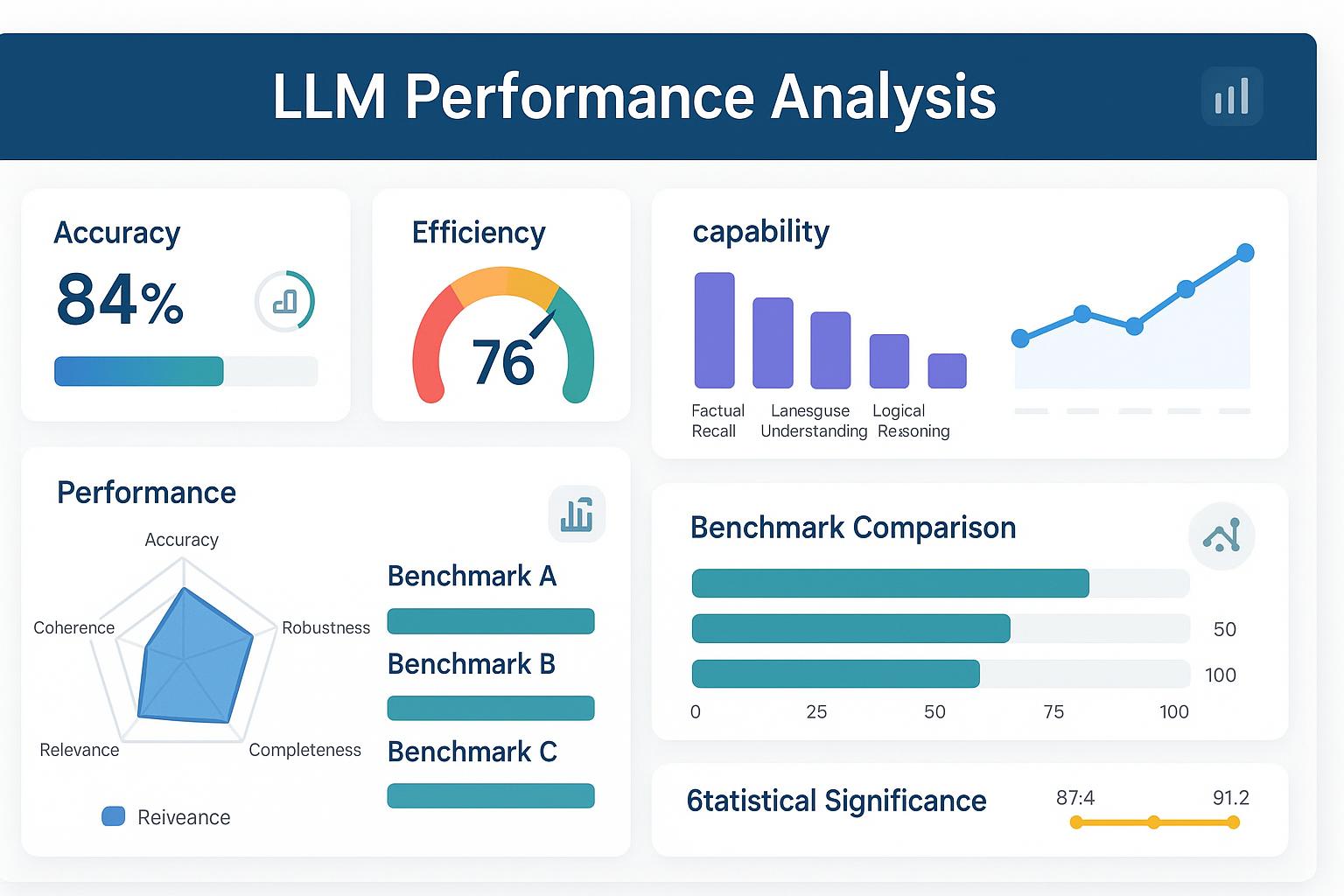

Benchmark architectures typically incorporate multiple evaluation dimensions including accuracy measurements, efficiency assessments, and capability evaluations that address different aspects of model performance. Each dimension employs specific metrics and analysis approaches that require different interpretation strategies and contextual understanding.

The relationship between different benchmark components affects overall score calculation and interpretation, with some benchmarks using weighted averages, others employing composite scoring systems, and still others providing separate scores for different evaluation dimensions. Understanding these relationships is crucial for accurate interpretation of overall performance assessments.
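
To make the effect of weighting concrete, here is a minimal Python sketch of a weighted-average composite score. The dimension names, scores, and weights are hypothetical values chosen for illustration and are not taken from any real benchmark.

```python
def weighted_composite(scores, weights):
    """Weighted average of per-dimension scores that share a 0-1 scale."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same dimensions")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[d] * weights[d] for d in scores) / total_weight


# Hypothetical per-dimension scores for two models (illustrative only).
model_a = {"accuracy": 0.90, "efficiency": 0.50, "capability": 0.70}
model_b = {"accuracy": 0.80, "efficiency": 0.80, "capability": 0.70}

# Two hypothetical weighting schemes a benchmark might use.
accuracy_heavy = {"accuracy": 0.6, "efficiency": 0.1, "capability": 0.3}
balanced = {"accuracy": 1.0, "efficiency": 1.0, "capability": 1.0}

for label, weights in [("accuracy-heavy", accuracy_heavy), ("balanced", balanced)]:
    a = weighted_composite(model_a, weights)
    b = weighted_composite(model_b, weights)
    print(f"{label}: model_a={a:.3f} model_b={b:.3f}")
```

With these made-up numbers the ranking flips between the two schemes, which is why the weighting behind an overall score is worth checking before comparing models on it.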

Statistical Foundations of Benchmark Analysis

Statistical analysis forms the foundation of reliable benchmark interpretation, providing quantitative frameworks for assessing the significance, reliability, and practical importance of observed performance differences. Professional interpretation requires understanding of confidence intervals, significance testing, and effect size measurements that distinguish meaningful differences from random variation.

Confidence intervals quantify the uncertainty in a benchmark result: a 95% interval comes from a procedure that would contain the true performance in about 95% of repeated evaluations. Narrow intervals indicate precise measurements, while wide intervals indicate uncertainty that weakens performance comparisons and selection decisions. Interval width shrinks roughly with the square root of the number of evaluation items, so small test sets yield wide intervals.
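
As one illustration, the sketch below computes a Wilson score interval for an accuracy measured as correct answers out of total items. The choice of the Wilson method and the example counts are assumptions made for this sketch; other interval methods are equally valid.

```python
import math


def wilson_interval(correct, total, z=1.96):
    """Wilson score interval for a proportion; z=1.96 gives roughly 95%."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = correct / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return center - half_width, center + half_width


# Same 80% accuracy, measured on a small and a large hypothetical test set.
for correct, total in [(80, 100), (800, 1000)]:
    low, high = wilson_interval(correct, total)
    print(f"{correct}/{total}: [{low:.3f}, {high:.3f}]")
```

The same headline accuracy carries a much wider interval on 100 items than on 1,000, so a small gap between two models on a small test set may not be distinguishable from noise.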

Statistical significance testing helps determine whether observed performance differences represent genuine model differences rather than random variation in evaluation results. However, statistical significance does not automatically imply practical significance, requiring additional analysis to assess whether differences matter for real-world applications.
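
When two models are scored on the same items, a paired test is a natural fit. The sketch below uses an exact McNemar test, which looks only at items where the models disagree. The function name and the example data are hypothetical, and this is one reasonable test among several.

```python
from math import comb


def mcnemar_exact(a_correct, b_correct):
    """Two-sided exact McNemar p-value from paired per-item correctness flags."""
    if len(a_correct) != len(b_correct):
        raise ValueError("both models must be scored on the same items")
    a_only = sum(1 for a, b in zip(a_correct, b_correct) if a and not b)
    b_only = sum(1 for a, b in zip(a_correct, b_correct) if b and not a)
    n = a_only + b_only
    if n == 0:
        return 1.0  # the models never disagree, so there is no evidence of a difference
    k = min(a_only, b_only)
    # Under the null hypothesis each disagreement is a fair coin flip.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical results: model A wins all 10 items on which the models disagree.
a_flags = [True] * 10 + [True] * 5
b_flags = [False] * 10 + [True] * 5
print(f"p = {mcnemar_exact(a_flags, b_flags):.4f}")
```

A small p-value here says the disagreement pattern is unlikely under chance alone; it says nothing about whether the gap is large enough to matter in deployment.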

Performance Metric Categories and Interpretation

LLM benchmark metrics fall into several distinct categories, each requiring specific interpretation approaches and contextual understanding. Accuracy metrics measure correctness of model outputs, efficiency metrics assess computational requirements, and capability metrics evaluate specific skills or knowledge domains.

Accuracy metrics include traditional classification measures such as precision, recall, and F1, as well as text generation metrics such as BLEU (an n-gram overlap measure originally developed for machine translation) and human evaluation ratings for subjective quality. Each metric provides different insight into model performance and must be interpreted within its own context and limitations.
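
For reference, precision, recall, and F1 can be computed from binary labels as in the following sketch. The label lists are hypothetical, and returning 0.0 when a denominator is empty is a convention assumed here.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical gold labels and model predictions.
gold = [1, 1, 1, 0, 0, 0]
predicted = [1, 1, 0, 1, 0, 0]
print(precision_recall_f1(gold, predicted))
```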

Efficiency metrics encompass response time, throughput, computational cost, and resource utilization measurements that affect practical deployment considerations. These metrics often involve trade-offs with accuracy metrics, requiring balanced interpretation that considers both performance quality and operational requirements.
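
A minimal sketch of summarizing efficiency measurements follows. It assumes requests were issued one at a time, so throughput is total tokens divided by total time, and it assumes latencies are recorded in seconds. The function name and the sample values are hypothetical.

```python
import statistics


def latency_summary(latencies_s, tokens_generated):
    """Median and 95th percentile latency plus sequential tokens per second."""
    if len(latencies_s) != len(tokens_generated):
        raise ValueError("one token count is required per request")
    if len(latencies_s) < 2:
        raise ValueError("at least two requests are needed for percentiles")
    # quantiles(n=100) returns the 99 cut points between percentiles 1 and 99.
    cuts = statistics.quantiles(latencies_s, n=100, method="inclusive")
    return {
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        "tokens_per_s": sum(tokens_generated) / sum(latencies_s),
    }


# Hypothetical measurements from four sequential requests.
summary = latency_summary([0.5, 1.0, 1.5, 2.0], [50, 100, 150, 200])
print(summary)
```

Reporting a tail percentile alongside the median matters because a model with a good median can still have slow outliers that dominate user experience.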

Advanced Interpretation Techniques

Comparative Analysis Methodologies

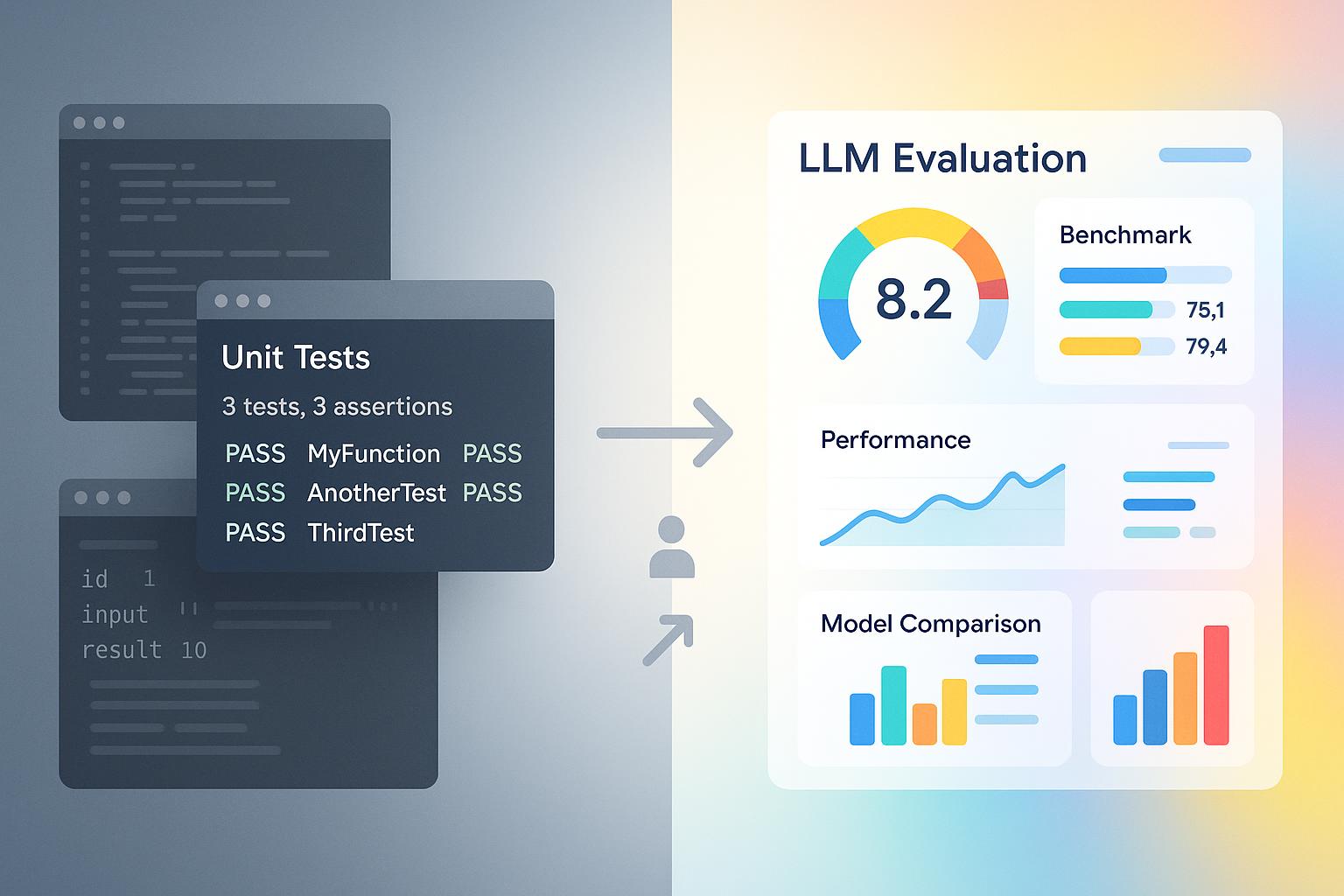

Effective benchmark interpretation often requires comparative analysis that examines performance differences across multiple models, evaluation conditions, or time periods. Professional comparative analysis employs systematic approaches that account for statistical significance, practical importance, and contextual factors that influence comparison validity.

Pairwise comparison analysis examines performance differences between specific model pairs, providing detailed insights into relative strengths and weaknesses that inform selection decisions. This analysis requires careful attention to statistical significance and effect sizes that indicate whether observed differences represent meaningful performance advantages.
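
One common effect size for comparing two accuracies is Cohen's h. The sketch below computes it, with hypothetical accuracy values. The conventional reading of about 0.2 as small, 0.5 as medium, and 0.8 as large is a rule of thumb and not a threshold that applies to every use case.

```python
import math


def cohens_h(p1, p2):
    """Cohen's h effect size for the difference between two proportions."""
    for p in (p1, p2):
        if not 0.0 <= p <= 1.0:
            raise ValueError("proportions must lie between 0 and 1")
    return 2 * math.asin(math.sqrt(p1)) - 2 * math.asin(math.sqrt(p2))


# Hypothetical accuracies of two models on the same benchmark.
h = cohens_h(0.85, 0.80)
print(f"Cohen's h = {h:.3f}")
```

A five-point accuracy gap in this range yields an h below the conventional "small" mark, a reminder that a difference can look notable on a leaderboard while being modest as an effect.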

Multi-model comparison analysis examines performance patterns across larger sets of models, identifying performance clusters, outliers, and systematic patterns that provide insights into model characteristics and evaluation reliability. This analysis often employs visualization techniques and statistical clustering approaches that reveal underlying performance structures.

Contextual Performance Assessment

Contextual assessment considers how benchmark performance relates to specific use cases, deployment scenarios, and operational requirements that affect practical model utility. This analysis goes beyond raw performance scores to examine whether benchmark results predict success in targeted applications and environments.

Task-specific performance analysis examines how models perform on evaluation tasks that closely match intended applications, providing insights into practical utility that may not be captured by general benchmark scores. This analysis requires understanding of task characteristics and their relationship to real-world requirements.

Domain-specific performance assessment considers how models perform within particular knowledge domains or application areas, accounting for specialized requirements and constraints that affect model suitability. This analysis often requires domain expertise and specialized evaluation criteria that complement general benchmark results.

Bias and Fairness Analysis

Bias analysis represents a critical component of professional benchmark interpretation, examining whether performance differences reflect genuine capability differences or systematic biases in evaluation approaches, datasets, or metrics. This analysis requires specialized techniques and frameworks that can identify and quantify various forms of evaluation bias.

Demographic bias analysis examines whether models exhibit differential performance across different demographic groups or cultural contexts, identifying potential fairness issues that affect model suitability for diverse applications and user populations. This analysis requires careful examination of evaluation datasets and performance breakdowns across different demographic categories.
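
A performance breakdown of this kind can be sketched as follows. The group labels and records are hypothetical, and the largest accuracy gap between groups is only one simple summary of disparity.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Per-group accuracy from (group, is_correct) records."""
    counts = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for group, is_correct in records:
        counts[group][0] += 1 if is_correct else 0
        counts[group][1] += 1
    return {group: correct / total for group, (correct, total) in counts.items()}


def largest_gap(per_group_accuracy):
    """Difference between the best and worst performing groups."""
    values = list(per_group_accuracy.values())
    return max(values) - min(values)


# Hypothetical per-item results tagged with a group label.
records = [("group_a", True)] * 9 + [("group_a", False)] * 1
records += [("group_b", True)] * 6 + [("group_b", False)] * 4
per_group = accuracy_by_group(records)
print(per_group, f"gap={largest_gap(per_group):.2f}")
```

Group sizes in real evaluation sets are often small, so each per-group accuracy deserves its own uncertainty estimate before a gap is read as evidence of bias.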

Methodological bias analysis assesses whether benchmark design choices, metric selection, or evaluation procedures systematically favor particular model types or approaches. This analysis requires understanding of benchmark methodologies and their potential impacts on performance measurement and comparison.

Practical Application of Interpretation Skills

Model Selection Decision Frameworks

Professional model selection requires systematic decision frameworks that integrate benchmark results with business requirements, technical constraints, and operational considerations. These frameworks provide structured approaches to translating performance data into actionable selection decisions while accounting for uncertainty and trade-offs.

Multi-criteria decision analysis provides quantitative frameworks for combining multiple performance dimensions, business requirements, and constraint considerations into systematic selection processes. This approach enables objective comparison of models across multiple evaluation criteria while maintaining transparency in decision-making processes.
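
A simple form of multi-criteria decision analysis is a weighted sum over min-max normalized criteria. The sketch below assumes that approach; the model names, criteria, values, and weights are all hypothetical.

```python
def mcda_rank(candidates, weights, lower_is_better=()):
    """Rank candidates by a weighted sum of min-max normalized criteria."""
    normalized = {name: {} for name in candidates}
    for criterion in weights:
        values = [candidates[name][criterion] for name in candidates]
        low, high = min(values), max(values)
        for name in candidates:
            if high == low:
                score = 1.0  # all candidates tie on this criterion
            else:
                score = (candidates[name][criterion] - low) / (high - low)
                if criterion in lower_is_better:
                    score = 1.0 - score
            normalized[name][criterion] = score
    total_weight = sum(weights.values())
    totals = {
        name: sum(weights[c] * normalized[name][c] for c in weights) / total_weight
        for name in candidates
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical candidates with a quality, a latency, and a cost criterion.
candidates = {
    "model_x": {"accuracy": 0.90, "latency_ms": 800, "cost": 10.0},
    "model_y": {"accuracy": 0.85, "latency_ms": 300, "cost": 2.0},
    "model_z": {"accuracy": 0.70, "latency_ms": 200, "cost": 1.0},
}
weights = {"accuracy": 0.5, "latency_ms": 0.25, "cost": 0.25}
ranking = mcda_rank(candidates, weights, lower_is_better=("latency_ms", "cost"))
print(ranking)
```

Min-max normalization depends on which candidates are in the pool, so adding or removing a model can change the scores of the others. Writing the weights down explicitly is what keeps the decision transparent.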

Risk-adjusted selection frameworks account for uncertainty in benchmark results and potential risks associated with different model choices. These frameworks incorporate confidence intervals, performance variability, and deployment risk assessments that support robust selection decisions under uncertainty.
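
One way to fold uncertainty into selection is to compare models on a lower confidence bound instead of a mean. The sketch below assumes scores from repeated evaluation runs and a normal approximation, which is rough for small run counts. The run scores are hypothetical.

```python
import math
import statistics


def lower_confidence_bound(run_scores, z=1.645):
    """Mean minus z standard errors; z=1.645 is a one-sided ~95% normal bound."""
    if len(run_scores) < 2:
        raise ValueError("at least two runs are needed to estimate variability")
    mean = statistics.mean(run_scores)
    standard_error = statistics.stdev(run_scores) / math.sqrt(len(run_scores))
    return mean - z * standard_error


# Hypothetical scores from five evaluation runs per model.
stable = [0.80, 0.81, 0.79, 0.80, 0.80]
volatile = [0.95, 0.60, 0.90, 0.70, 0.90]

for name, runs in [("stable", stable), ("volatile", volatile)]:
    print(f"{name}: mean={statistics.mean(runs):.3f} "
          f"lower_bound={lower_confidence_bound(runs):.3f}")
```

In this made-up example the volatile model has the higher mean but the lower bound, so a risk-averse selection would prefer the stable one.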

Performance Monitoring and Optimization

Benchmark interpretation skills extend beyond initial model selection to ongoing performance monitoring and optimization activities that maintain model effectiveness over time. Professional monitoring requires understanding of performance trends, degradation patterns, and optimization opportunities that emerge from continuous evaluation.

Trend analysis examines performance changes over time, identifying patterns of improvement, degradation, or stability that inform maintenance and optimization decisions. This analysis requires statistical techniques for trend detection and change point analysis that distinguish genuine performance changes from random variation.
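
A basic trend check can be sketched as a least-squares slope plus a permutation test that asks how often shuffled scores produce a slope at least as steep. This is one simple technique assumed for illustration, not a full change point analysis, and the weekly scores are hypothetical.

```python
import random


def slope(scores):
    """Least-squares slope of scores against their index (0, 1, 2, ...)."""
    n = len(scores)
    if n < 2:
        raise ValueError("at least two observations are needed")
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(scores))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    return sxy / sxx


def trend_p_value(scores, n_permutations=2000, seed=0):
    """Two-sided permutation p-value for the slope being non-zero."""
    rng = random.Random(seed)
    observed = abs(slope(scores))
    shuffled = list(scores)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(slope(shuffled)) >= observed - 1e-12:
            hits += 1
    # Add-one correction keeps the estimate away from exactly zero.
    return (hits + 1) / (n_permutations + 1)


# Hypothetical weekly accuracy that drifts down by one point per week.
weekly = [0.90, 0.89, 0.88, 0.87, 0.86, 0.85, 0.84, 0.83]
print(f"slope={slope(weekly):.4f} p={trend_p_value(weekly):.4f}")
```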

Comparative monitoring tracks performance relative to other models or baseline systems, providing context for absolute performance measurements and identifying opportunities for model upgrades or optimization. This analysis requires ongoing benchmark evaluation and systematic comparison approaches.

Common Interpretation Pitfalls and How to Avoid Them

Statistical Misinterpretation Issues

Statistical misinterpretation represents one of the most common sources of errors in benchmark analysis, leading to incorrect conclusions about model performance and inappropriate selection decisions. Understanding and avoiding these pitfalls is essential for reliable benchmark interpretation and decision-making.

Significance versus practical importance confusion occurs when statistically significant differences are assumed to be practically meaningful, or when non-significant differences are dismissed despite potential practical importance. Professional interpretation requires assessment of both statistical and practical significance using appropriate effect size measures and contextual analysis.

Multiple comparison problems arise when many pairwise comparisons are run without statistical correction: at a 0.05 threshold, each additional test adds another chance of a false positive, so the family-wise error rate grows with the number of comparisons. Professional analysis applies a correction such as Bonferroni or Holm and structures comparisons in advance to maintain statistical validity.
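
As an illustration of one such correction, the sketch below applies the Holm-Bonferroni step-down procedure to a list of p-values. The p-values are hypothetical.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a reject/keep flag per hypothesis using the Holm step-down rule."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, index in enumerate(order):
        # The smallest p-value is tested at alpha/m, the next at alpha/(m-1), ...
        if p_values[index] <= alpha / (m - rank):
            reject[index] = True
        else:
            break  # once one test fails, all larger p-values are kept
    return reject


# Hypothetical p-values from four pairwise model comparisons.
p_values = [0.01, 0.04, 0.03, 0.005]
print(holm_bonferroni(p_values))
```

All four hypothetical comparisons fall below 0.05 on their own, yet only two survive the correction.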

Overgeneralization and Context Errors

Overgeneralization occurs when benchmark results are applied beyond their appropriate scope, leading to incorrect assumptions about model performance in different contexts or applications. Professional interpretation requires careful attention to benchmark scope, evaluation conditions, and generalizability limitations.

Benchmark-to-application mismatch represents a common overgeneralization error where benchmark performance is assumed to predict performance in significantly different application contexts. Professional interpretation requires analysis of task similarity, evaluation condition relevance, and domain-specific factors that affect generalizability.

Temporal overgeneralization occurs when benchmark results are assumed to remain valid over extended time periods without accounting for model updates, evaluation changes, or evolving requirements. Professional interpretation incorporates temporal considerations and update strategies that maintain result relevance.

Vendor and Marketing Claim Validation

Commercial benchmark claims require careful validation and independent analysis to distinguish genuine performance advantages from marketing positioning and selective result presentation. Professional interpretation employs systematic validation approaches that assess claim accuracy and completeness.

Selective result presentation occurs when vendors highlight favorable benchmark results while omitting less favorable outcomes, creating misleading impressions of overall model performance. Professional analysis requires comprehensive result examination and independent validation of vendor claims.

Benchmark gaming represents a more serious issue where models are specifically optimized for benchmark performance rather than general capability, leading to inflated scores that don't reflect real-world utility. Professional interpretation requires understanding of potential gaming strategies and validation through diverse evaluation approaches.

Advanced Tools and Techniques

Automated Analysis Systems

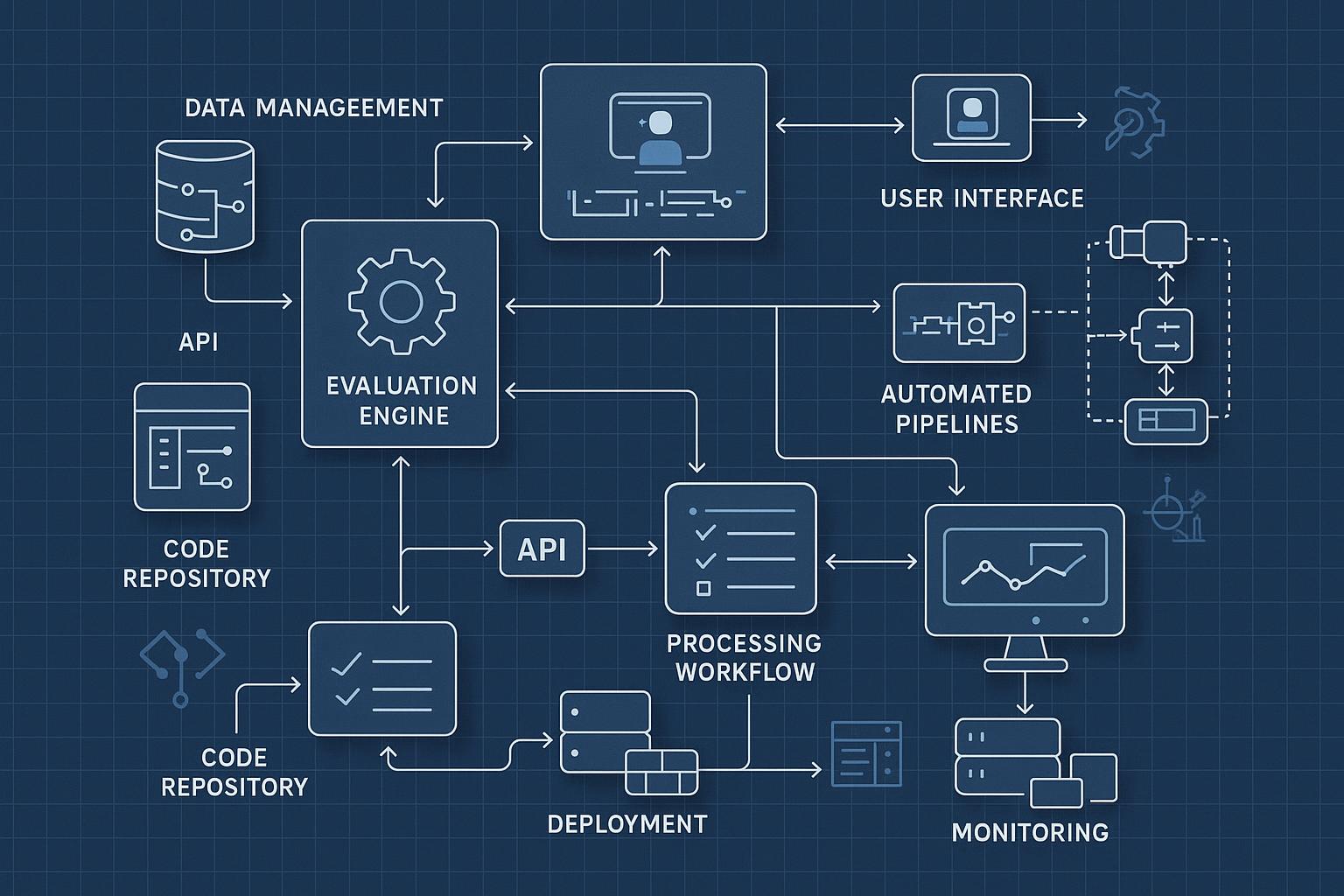

Automated analysis systems provide scalable approaches to benchmark interpretation that can process large volumes of evaluation data while maintaining consistency and objectivity in analysis approaches. These systems employ statistical analysis, machine learning, and visualization techniques that enhance interpretation capabilities.

Statistical analysis automation employs computational tools that perform significance testing, confidence interval calculation, and effect size analysis across large benchmark datasets. These tools enable comprehensive analysis that would be impractical through manual approaches while maintaining statistical rigor.

Visualization automation creates standardized charts, graphs, and dashboards that facilitate interpretation of complex benchmark results through visual analysis. These tools enable rapid identification of patterns, outliers, and relationships that might be missed through numerical analysis alone.

Ensemble Interpretation Approaches

Ensemble interpretation combines results from multiple benchmarks, evaluation approaches, or analysis techniques to provide more robust and comprehensive assessment of model performance. These approaches reduce reliance on individual benchmark results while providing broader perspective on model capabilities.

Multi-benchmark analysis combines results from different evaluation frameworks to provide comprehensive assessment that accounts for different evaluation perspectives and methodological approaches. This analysis requires careful attention to benchmark compatibility and result integration techniques.
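
Because different benchmarks report scores on different scales, one simple integration technique is to average each model's rank across benchmarks. The sketch below assumes higher scores are better everywhere and does not handle ties. The benchmark and model names are hypothetical.

```python
def mean_ranks(results):
    """Average rank per model across benchmarks (rank 1 is best)."""
    if not results:
        raise ValueError("at least one benchmark is required")
    # Only models present in every benchmark can be compared fairly.
    models = set.intersection(*(set(scores) for scores in results.values()))
    totals = {model: 0.0 for model in models}
    for scores in results.values():
        ordered = sorted(models, key=lambda model: scores[model], reverse=True)
        for rank, model in enumerate(ordered, start=1):
            totals[model] += rank
    return {model: total / len(results) for model, total in totals.items()}


# Hypothetical scores; note that bench_b uses a 0-100 scale.
results = {
    "bench_a": {"m1": 0.90, "m2": 0.80, "m3": 0.70},
    "bench_b": {"m1": 55, "m2": 70, "m3": 40},
    "bench_c": {"m1": 0.60, "m2": 0.65, "m3": 0.30},
}
print(mean_ranks(results))
```

Rank aggregation discards the size of each gap, which makes it robust to scale differences but blind to whether a model lost narrowly or by a wide margin.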

Cross-validation interpretation employs multiple evaluation approaches or datasets to validate benchmark results and assess their robustness across different evaluation conditions. This approach provides confidence assessment and identifies potential evaluation artifacts that might affect result reliability.

Future Directions in Benchmark Interpretation

Emerging Analysis Methodologies

The evolution of LLM capabilities and evaluation approaches drives development of new interpretation methodologies that address emerging challenges and opportunities in benchmark analysis. These methodologies incorporate advances in statistical analysis, machine learning, and domain-specific evaluation approaches.

Causal analysis techniques examine causal relationships between model characteristics and performance outcomes, providing deeper insights into factors that drive performance differences and optimization opportunities. These techniques require sophisticated statistical approaches and careful experimental design.

Uncertainty quantification methods provide more sophisticated approaches to assessing and communicating uncertainty in benchmark results, enabling better decision-making under uncertainty and more accurate risk assessment in model selection and deployment.
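
A widely used, assumption-light way to quantify uncertainty is the percentile bootstrap, sketched below for a mean score over evaluation items. The resample count, seed, and per-item scores are hypothetical choices for this example.

```python
import random


def bootstrap_ci(per_item_scores, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the mean of per-item scores."""
    if not per_item_scores:
        raise ValueError("at least one item score is required")
    rng = random.Random(seed)
    n = len(per_item_scores)
    # Resample items with replacement and record the mean of each resample.
    means = sorted(
        sum(rng.choices(per_item_scores, k=n)) / n for _ in range(n_resamples)
    )
    low_index = int((alpha / 2) * n_resamples)
    high_index = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))
    return means[low_index], means[high_index]


# Hypothetical per-item correctness: 80 correct answers out of 100 items.
item_scores = [1] * 80 + [0] * 20
low, high = bootstrap_ci(item_scores)
print(f"95% bootstrap interval: [{low:.3f}, {high:.3f}]")
```

The same function works for any per-item score, including graded or continuous ratings, which is one reason the bootstrap is convenient for benchmark data.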

Integration with Real-World Performance

The growing emphasis on real-world performance validation drives development of interpretation approaches that connect benchmark results with actual deployment outcomes and user satisfaction measures. These approaches provide validation of benchmark relevance and practical utility.

Production performance correlation analysis examines relationships between benchmark results and actual deployment performance, identifying which benchmark metrics best predict real-world success and user satisfaction. This analysis enables more effective benchmark selection and interpretation strategies.
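
A rank correlation is one way to check whether a benchmark orders models the same way production outcomes do. The sketch below computes Spearman's rho with average ranks for ties. The benchmark scores and user ratings are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = shared_rank
        start = end + 1
    return ranks


def spearman(xs, ys):
    """Spearman rank correlation, computed as Pearson correlation of ranks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long lists with at least two values")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical benchmark scores and average user ratings for four models.
benchmark_scores = [0.60, 0.70, 0.80, 0.90]
user_ratings = [3.1, 3.0, 4.2, 4.5]
print(f"Spearman rho = {spearman(benchmark_scores, user_ratings):.2f}")
```

With only a handful of models the estimate is very noisy, so a correlation like this is better treated as a prompt for further investigation than as proof that a benchmark predicts production success.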

User feedback integration incorporates actual user experiences and satisfaction measures into benchmark interpretation frameworks, providing practical validation of performance assessments and identification of gaps between benchmark and real-world performance.

Conclusion and Professional Development

Mastering LLM benchmark interpretation requires ongoing development of statistical analysis skills, domain knowledge, and practical experience with different evaluation frameworks and methodologies. Professional competence in this area provides significant value for organizations deploying LLMs and researchers developing new models and evaluation approaches.

The most effective interpretation practitioners combine strong statistical foundations with deep understanding of LLM capabilities, evaluation methodologies, and practical deployment considerations. This combination enables accurate analysis, appropriate contextualization, and actionable recommendations that support successful model selection and deployment decisions.

Key takeaways for professional development include:

- Statistical rigor: Maintain strong foundations in statistical analysis and significance testing

- Contextual awareness: Always consider the practical implications and limitations of benchmark results

- Comprehensive analysis: Use multiple evaluation approaches and validation techniques

- Continuous learning: Stay updated with emerging methodologies and evaluation frameworks

The future of benchmark interpretation will likely emphasize more sophisticated analysis approaches, better integration with real-world performance data, and enhanced communication techniques that make complex analysis results accessible to diverse stakeholder audiences. Professionals who develop these advanced capabilities will provide increasing value as LLM deployment becomes more widespread and sophisticated.
